I have been working with my own custom build system, and it has been working really well for me while building C++ code. As I have been adding the capability to build shaders, however, I have run into several problems, some of which I don’t have good solutions for. Most of them are curiously interrelated, but the biggest single problem could be summarized as:

The #include file dependencies aren’t known until a shader is compiled, and there isn’t a great mechanism for getting that information back to the build system

This post will discuss some of the individual problems and potential solutions.

How Dependencies are Handled Generally

I have a scheme for creating files with information about the last successful execution of a task. There is nothing clever or special about this, but here is a simple example:

    {
        "version":1,
        "hash":"2ade222a1aff526d0d4df0a2f5849210",
        "paths":[
            {"path":"Engine\\Type\\Arithmetic.test.cpp","time":133783428404663533},
            {"path":"temp\\win64\\unoptimized\\intermediate\\jpmake\\Precompiled\\Precompiled_common.c843349982ad311df55b7da4372daa2d.pch","time":133804112386989788},
            {"path":"C:\\Program Files\\Microsoft Visual Studio\\2022\\Community\\VC\\Tools\\MSVC\\14.41.34120\\bin\\Hostx64\\x64\\cl.exe","time":133755106284710903},
            {"path":"jpmake\\IDE\\VS2022\\temp\\x64\\Release\\output\\jpmake\\jpmake.exe","time":133804111340658384}
        ]
    }

This allows me to record 1) which files a specific target depends on, 2) what the last-modified time of each file was the last time that the task with the target as an output was executed, and 3) a hash value that contains any other information that should cause the task to be executed again if something changes.
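The check that such a file makes possible can be sketched roughly as follows. This is only an illustration in Lua, not the build system's actual implementation (which isn't shown in this post): the function name NeedsExecution, the injected i_getModifiedTime helper, and the representation of the record as a Lua table are all assumptions made for the example, and the times are small made-up numbers.

```lua
-- Hypothetical sketch: decide whether a task must be executed again,
-- given the record that was saved after its last successful execution.
-- i_getModifiedTime is an assumed helper that returns a file's
-- last-modified time, or nil if the file doesn't exist.
function NeedsExecution(i_record, i_currentHash, i_getModifiedTime)
    -- No record means that the task has never succeeded
    if i_record == nil then
        return true, "no record of a previous execution"
    end
    -- The hash covers everything that isn't a file (e.g. arguments)
    if i_record.hash ~= i_currentHash then
        return true, "hash changed"
    end
    -- Any file whose time differs from the recorded time counts as a change
    for _, file in ipairs(i_record.paths) do
        local currentTime = i_getModifiedTime(file.path)
        if currentTime == nil then
            return true, "missing file: " .. file.path
        elseif currentTime ~= file.time then
            return true, "modified file: " .. file.path
        end
    end
    return false
end

-- Example usage with made-up data
local record = {
    hash = "2ade",
    paths = {
        {path = "Arithmetic.test.cpp", time = 100},
        {path = "cl.exe", time = 50},
    },
}
local times = {["Arithmetic.test.cpp"] = 101, ["cl.exe"] = 50}
print(NeedsExecution(record, "2ade", function(i_path) return times[i_path] end))
```

In this made-up case the source file's time no longer matches the recorded one, so the function reports that the task has to run again and names the file that caused it.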

This has worked well for all of the different kinds of tasks that I have added so far, and it usually corresponds pretty closely to what the user has manually specified. The one big exception has been C++ compilation, where things are more complicated because of #include directives. Rather than require the user to specify individual files that are #included (and then transitive files that those files #include) the build system instead uses a command argument to have MSVC’s compiler create a file with the dependencies, and then that file’s contents are added to the dependency file that my build system uses.

This is possible because I have made building C++ a specialized kind of task where I have implemented everything; this means that the Lua interface that the user (me) worries about is designed to be easy-to-use and then the build system takes care of all of the annoying details.

What about Shaders?

My mental goal has been to design the build system generally so that any kind of task can be defined, and that Lua is used for the project files so that any kind of programming can be done to define these tasks. I always knew that I would make some kind of specialized tasks that could be considered common (copying files is the best example of this), but the aspirational design goal would be to not require specialized tasks for everything but instead let the user define them.

The main motivation behind this design goal was so that “assets” could be handled the same way as code. I wanted to be able to build asset-building programs and then use them to build assets for a game, and I didn’t want to have to create specialized task types for each of these as part of the build system (the build system should be able to be used with any project, with no knowledge about my specific game engine).

Building shaders as the first kind of asset type has revealed a problem that is obvious but that I somehow didn’t fully anticipate: How to deal with implicit dependencies, specifically #include directives, when the task type is just an arbitrary command with arguments that the build system has no specialized knowledge of?

Dependency Callback

Like its C++ cl.exe compiler, Microsoft’s dxc.exe shader compiler can output a file with dependencies (e.g. the #include files). My current scheme is to allow the user to specify a callback that is called after a command execution succeeds and which returns any dependencies that can’t be known until after execution; this means that the callback function can parse and extract the data from the dependencies file that dxc.exe outputs and then report that data back to the build system. Here is currently how that can be done for a single shader:

    local task_engineShaders = CreateNamedTask("EngineShaders")
    local path_input = ResolvePath("$(engineDataDir)shaders.hlsl")
    do
        local dxc_directory = g_dxc_directory
        local path_output_noExtension = ResolvePath("$(intermediateDir_engineData)shader_vs.")
        local path_output = path_output_noExtension .. "shd"
        local path_dependenciesFile = path_output_noExtension .. "dep.dxc"
        task_engineShaders:ExecuteCommand{
            command = (dxc_directory .. "dxc.exe"), dependencies = {(dxc_directory .. "dxcompiler.dll"), (dxc_directory .. "dxil.dll")},
            inputs = {path_input},
            outputs = {path_dependenciesFile},
            arguments = {path_input,
                "-T", "vs_6_0", "-E", "main_vs", "-fdiagnostics-format=msvc",
                "-MF", path_dependenciesFile
            },
            postExecuteReturnDependenciesCallback = ReturnShaderDependencies, postExecuteReturnDependenciesCallbackUserData = path_dependenciesFile
        }
        local path_debugSymbols = path_output_noExtension .. "pdb"
        local path_assembly = path_output_noExtension .. "shda"
        task_engineShaders:ExecuteCommand{
            command = (dxc_directory .. "dxc.exe"), dependencies = {(dxc_directory .. "dxcompiler.dll"), (dxc_directory .. "dxil.dll"), path_dependenciesFile},
            inputs = {path_input},
            outputs = {path_output},
            arguments = {path_input,
                "-T", "vs_6_0", "-E", "main_vs", "-fdiagnostics-format=msvc",
                "-Fo", path_output, "-Zi", "-Fd", path_debugSymbols, "-Fc", path_assembly,
            },
            artifacts = {path_debugSymbols},
            postExecuteReturnDependenciesCallback = ReturnShaderDependencies, postExecuteReturnDependenciesCallbackUserData = path_dependenciesFile
        }
    end

The first problem that is immediately noticeable to me is that this is a terrible amount of text to just build a single shader. We will return to this problem later in this post, but note that since this is Lua I could make any convenience functions I want so that the user doesn’t have to manually type all of this for every shader. For the sake of understanding, though, the code above shows what is actually required.

The source path is path_input, the compiled output path is path_output, and the path of the dependencies file that DXC can output is path_dependenciesFile. Two different commands are submitted for potential execution (the two ExecuteCommand calls), and the postExecuteReturnDependenciesCallback and postExecuteReturnDependenciesCallbackUserData fields show how the callback is specified (there is a function and also some “user data”, a payload that is passed as a function argument).

Why are there two different commands? Because DXC requires a separate invocation in order to generate the dependency file using -MF. I found this GitHub issue where this is the behavior that was specifically requested (although someone else later in the issue comments writes that they would prefer a single invocation, but I guess that didn’t happen). This behavior is annoying for me because it requires two separate sub tasks, and it is kind of tricky to figure out the dependencies between them.

Here is what the callback function looks like:

    do
        local pattern_singleInput = ":(.-[^\\]\r?\n)"
        local pattern_singleDependency = "%s*(.-)%s*\\?\r?\n"
        local FindAllMatches = string.gmatch
        --
        ReturnShaderDependencies = function(i_path_dependenciesFile)
            local o_dependencies = {}
            -- Read the dependencies file
            local contents_dependenciesFile = ReadFile(i_path_dependenciesFile)
            -- Iterate through the dependencies of each input
            for dependencies_singleInput in FindAllMatches(contents_dependenciesFile, pattern_singleInput) do
                -- Iterate through each dependency of a single input
                for dependency in FindAllMatches(dependencies_singleInput, pattern_singleDependency) do
                    o_dependencies[#o_dependencies + 1] = dependency
                end
            end
            return o_dependencies
        end
    end

First, the good news: It was pretty easy to write code to parse the dependencies file and extract the desired information, which is exactly what I was hoping for by using Lua.
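To make the two patterns concrete, here is a small self-contained variation that parses a string instead of reading a file, so that it runs without the build system's ReadFile() function. The sample contents are an assumption: a make-style rule with backslash line continuations, which is what the patterns appear to expect. It is not output captured from dxc.exe, and the paths and the name ParseDependencies are made up.

```lua
-- The same two patterns as the callback, applied to a string
function ParseDependencies(i_contents)
    -- Everything after a ':' up to the first newline that isn't escaped by a backslash
    local pattern_singleInput = ":(.-[^\\]\r?\n)"
    -- A single path, with surrounding whitespace and any trailing backslash removed
    local pattern_singleDependency = "%s*(.-)%s*\\?\r?\n"
    local o_dependencies = {}
    for dependencies_singleInput in string.gmatch(i_contents, pattern_singleInput) do
        for dependency in string.gmatch(dependencies_singleInput, pattern_singleDependency) do
            o_dependencies[#o_dependencies + 1] = dependency
        end
    end
    return o_dependencies
end

-- ASSUMED sample contents ("target: dependency \" with continuation lines)
local sample = "shader_vs.shd: data/shaders.hlsl \\\n"
    .. "  data/common.hlsli \\\n"
    .. "  data/lighting.hlsli\n"

for _, dependency in ipairs(ParseDependencies(sample)) do
    print(dependency)
end
```

For this sample the loop prints the three paths, one per line, with the indentation and the trailing backslashes stripped.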

Unfortunately, there is more bad news than good.

The reason that there is a separate callback and then user data (rather than using the dependencies file path as an upvalue) is so that there can be just a single function, saving memory. I also discovered a way to detect if the function had changed using the lua_dump() function, and this seemed to work well initially. I found, however, that lua_dump() doesn’t capture upvalues (which makes sense after reading about it, because upvalues can change), which means that there is a bug in the callback I show above: If I change either of the two patterns (pattern_singleInput or pattern_singleDependency, which are upvalues of the function) it won’t trigger the task to execute again because I don’t have a way of detecting that a change happened. In this specific case this is easy to fix by making the strings local to the function (and, in fact, that’s probably better anyway regardless of the problem I’m currently discussing), but it is really discouraging to realize that there is this inherent problem; I don’t want to have to remember any special rules about what can or can’t be done, and even worse I don’t want dependencies to silently not work as expected if those arcane rules aren’t followed.
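The upvalue problem can be reproduced from Lua itself with string.dump(), which is the Lua-level counterpart of lua_dump(). The following is a minimal sketch: the chunk sources, the "=demo" chunk name, and the function names are made up for illustration, and how the build system actually compares dumps isn't shown in this post.

```lua
-- loadstring exists in Lua 5.1 and LuaJIT; load accepts strings in 5.2+
local compile = loadstring or load

-- Here the pattern is an upvalue of the returned function
local source_upvalue = [[
    local pattern = ...
    return function(s) return string.match(s, pattern) end
]]
-- Here the pattern is a constant inside the returned function
local source_local = [[
    return function(s)
        local pattern = %q
        return string.match(s, pattern)
    end
]]

-- Both helpers use the same chunk name so that only the code can differ
function DumpWithUpvalue(i_pattern)
    return string.dump(compile(source_upvalue, "=demo")(i_pattern))
end
function DumpWithLocal(i_pattern)
    return string.dump(compile(string.format(source_local, i_pattern), "=demo")())
end

-- Changing the value of an upvalue goes unnoticed...
print(DumpWithUpvalue("a+") == DumpWithUpvalue("b+")) --> true
-- ...but changing a constant inside the function is detected
print(DumpWithLocal("a+") == DumpWithLocal("b+")) --> false
```

The dump only describes the function's own code and constants, so moving the patterns inside the function is what makes a change to them visible.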

There is another fundamental problem with using a Lua function as a callback: It means that there must be some kind of synchronization between threads. The build system works as follows:

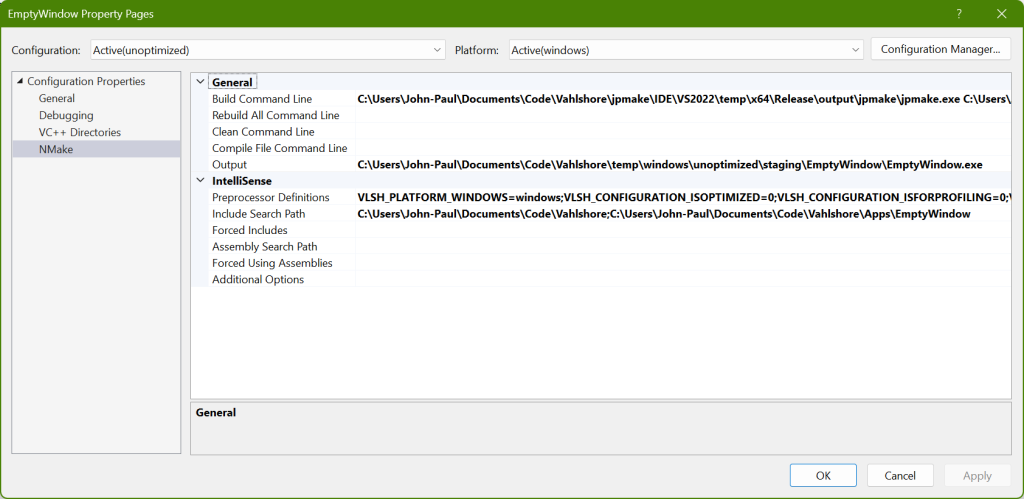

- Phase 1: Gather all of the info (reading the Lua project file and storing all of the information needed to determine whether tasks need to be executed and to execute them)
    - During this phase all data is mutable, and everything is thus single-threaded
- Phase 2: Iterate through each task, deciding whether it must be executed and executing it if necessary
    - During this phase all data is supposed to be immutable, and everything can thus be multi-threaded
    - (As of this post everything is still single-threaded because I haven’t implemented different threads yet, but I have designed everything to be thread safe looking towards the future when tasks can be executed on different threads simultaneously)

The problem with using Lua during Phase 2 is that it violates these important design decisions. If Phase 2 were multi-threaded like it is intended to be then there would be a bad bug if two different threads called a Lua function at the same time. I don’t think that the problem is insurmountable: There is a lua_newthread() function that can be used to have a separate execution stack, but even with that there are some issues. For example, if I assume that all data is immutable then I could probably get away without doing any locking/unlocking in Lua, but I don’t have any way of enforcing that assumption if I allow arbitrary functions to be used as callbacks. A user could do anything they wanted, including having some kind of persistent state that gets updated every time a Lua function is called. This again involves arcane rules that aren’t enforceable but that could cause bad bugs if they are broken.

What I really want is to allow the user to use as much Lua as they want for defining tasks and dependencies in Phase 1, but to never use it again once Phase 2 starts. But, even though that’s the obvious ideal situation, how is it possible to deal with situations where dependencies aren’t known until execution and they must be reported?

Possible Solutions

Unfortunately, I don’t have good solutions yet for some of these problems. I do have a few ideas, however.

Automatic Dependencies

My dream change that would help with a lot of these problems would be to have my build system detect which files are read when executing a task so that it can automatically calculate the dependencies.

Look again at one of the lines where I am defining the shader compilation task:

    command = (dxc_directory .. "dxc.exe"), dependencies = {(dxc_directory .. "dxcompiler.dll"), (dxc_directory .. "dxil.dll")},

The user specifies dxc.exe, which is good because that’s the program that must run. But then it’s frustrating that dxcompiler.dll and dxil.dll also have to be specified; I only happen to know that they are required because they come bundled with dxc.exe, but how would I know that otherwise? There are certainly other Windows DLLs that are being used by these three that I don’t have listed here, and why should I have to know that? Even if I took the time to figure it out a single time is it also my responsibility to track this every time that there is a DXC update?

There is a build system named tup that takes care of figuring this out automatically, but although I have looked into this several times it doesn’t seem like there is an obvious way to do this (at least in Windows). I think the general method that would be required is 1) create a new process but with its main thread suspended, 2) hook any functions in that process that are relevant, 3) resume the main thread. The hooking step, though, seems tricky. It also seems difficult to differentiate between file accesses that should be treated as dependencies and ones that are outputs; I think maybe if there is any kind of write permission then it could be treated as not-a-dependency, but I haven’t fully thought that through.

I have looked into this automatic dependency calculation several times and every time given up because it looks like too big of a task. If I were working full time on the build system I think this is what I would do, but since my main goal currently is the game engine it has been too hard for me to justify trying to make this work. Having said that, it seems like the only way to handle these problems that would satisfy me as a user, and so maybe some day I will dive in and try to make it work.

Two Invocations of DXC.exe

Without automatic dependency calculation, two different invocations of dxc.exe seem to be required. The solution to this from the standpoint of a nice build project file seems, at least, pretty obvious: Write a custom tool with my own command arguments which could internally execute dxc.exe twice, but that would only be run once from the perspective of the build system. Having a wrapper tool like this would also mean that I could create my own command arguments, with a related benefit that the same program and same arguments could be called for any platform (and the wrapper tool could then do the appropriate thing).

Avoiding Lua Callbacks

One idea I have had is that an execute-command task could be required to create its own dependency file. If I am already going to write some kind of shader compiler wrapper program around dxc.exe then I could also have it load and parse the dependency file and output a different dependency file in the format that my build system wants. This has some clear disadvantages because it means a user is expected to write similar wrapper tools around any kind of command that they want to execute, which is obviously a huge burden compared to just writing a Lua function. I think the advantage is that it would avoid all of the potential problems with violating confusing rules about what can or can’t be done in a Lua function in my build system, and that is attractive to avoid silent undetectable bugs, but it certainly doesn’t fit in with the ergonomics that I imagined.

I need to think about this more and hope that I come up with better ideas.

Specialized Shader Tasks

A final solution would be for me to just make a specialized task type for building shaders, just like I have for C++. Then I could write it all in C++ code, try to make it as efficient as possible, and it would accomplish the same end goal of letting me specify configuration and making it cross platform just like a separate “wrapper” program would do, but it would be part of the build system itself (rather than requiring any user of the build system to do the same thing). This avoids the fundamental problems with arbitrary commands but those problems will eventually have to be solved for other asset types; I think it might be worth doing, however, because shaders are such an important kind of asset.

The big reason I hesitate to do this (besides the time that it would take to do) is that there is a lot of custom code that can be built up around shaders. My engine is still incredibly basic and so I have just focused on compiling a hand-written shader source file, but eventually (if I have time) the shader source files will be generated somehow, and there could well be a very involved process for this and for getting reflection data and for generating accompanying C++ code. Knowing this and thinking about past systems that I have built at different jobs I am not sure if it makes sense to spend time making a system focused on dxc.exe that I might not end up using; maybe I will end up with a “wrapper” program anyway that does much more than just compiling shaders.

Update: Improving User API with Lua

I alluded to this in the original post but didn’t give an example. Since Lua is used to define how things get built it is possible to hide many of the unpleasant boilerplate details of a task (e.g. a task to build shaders) behind nice abstractions. The following is an example of something that I came up with:

    local task_engineShaders = CreateNamedTask("EngineShaders")
    local path_input = "$(engineDataDir)shaders.hlsl"
    local path_output_vs, path_output_ps
    do
        local entryPoint = "main_vs"
        local shaderType = "vertex"
        local path_intermediate_noExtension = "$(intermediateDir_engineData)shader_vs."
        path_output_vs = path_intermediate_noExtension .. "shd"
        BuildShader(path_input, entryPoint, shaderType, path_output_vs, path_intermediate_noExtension, task_engineShaders)
    end
    do
        local entryPoint = "main_ps"
        local shaderType = "pixel"
        local path_intermediate_noExtension = "$(intermediateDir_engineData)shader_ps."
        path_output_ps = path_intermediate_noExtension .. "shd"
        BuildShader(path_input, entryPoint, shaderType, path_output_ps, path_intermediate_noExtension, task_engineShaders)
    end

This code builds two shaders now (a vertex and a pixel/fragment shader), compared to the single shader shown previously in this post, but it is still much easier to read because most of the unpleasantness is hidden in the BuildShader() function. This function isn’t anything special in the build system but is just a Lua function that I defined in the project file itself. This shows the promise of why I wanted to do it this way, because it makes it very easy to build abstractions with a fully-powered programming language.
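The definition of BuildShader() isn't shown here. A hypothetical reconstruction, pieced together from the two ExecuteCommand calls earlier in the post, might look roughly like the following. The table that maps shader types to target profiles (including "ps_6_0"), the choice to call ResolvePath() inside the function, and the stand-in globals at the top are all assumptions so that the sketch can run on its own; the real function may well differ.

```lua
-- Stand-ins (assumptions) for things that the build system or project file
-- would normally provide; they are only used if the real ones are absent
ResolvePath = ResolvePath or function(i_path) return i_path end
g_dxc_directory = g_dxc_directory or "dxc/"
ReturnShaderDependencies = ReturnShaderDependencies or function() return {} end

-- Assumed mapping from a friendly shader type to a DXC target profile
local targetProfiles = {vertex = "vs_6_0", pixel = "ps_6_0"}

function BuildShader(i_path_input, i_entryPoint, i_shaderType,
    i_path_output, i_path_intermediate_noExtension, io_task)
    local targetProfile = targetProfiles[i_shaderType]
        or error("Unsupported shader type: " .. tostring(i_shaderType))
    local path_input = ResolvePath(i_path_input)
    local path_output = ResolvePath(i_path_output)
    local path_noExtension = ResolvePath(i_path_intermediate_noExtension)
    local path_dependenciesFile = path_noExtension .. "dep.dxc"
    local path_debugSymbols = path_noExtension .. "pdb"
    local path_assembly = path_noExtension .. "shda"
    local path_dxc = g_dxc_directory .. "dxc.exe"
    local path_dxcompiler = g_dxc_directory .. "dxcompiler.dll"
    local path_dxil = g_dxc_directory .. "dxil.dll"
    -- First invocation: only generate the dependencies file
    io_task:ExecuteCommand{
        command = path_dxc, dependencies = {path_dxcompiler, path_dxil},
        inputs = {path_input},
        outputs = {path_dependenciesFile},
        arguments = {path_input,
            "-T", targetProfile, "-E", i_entryPoint, "-fdiagnostics-format=msvc",
            "-MF", path_dependenciesFile
        },
        postExecuteReturnDependenciesCallback = ReturnShaderDependencies,
        postExecuteReturnDependenciesCallbackUserData = path_dependenciesFile
    }
    -- Second invocation: compile the shader itself
    io_task:ExecuteCommand{
        command = path_dxc,
        dependencies = {path_dxcompiler, path_dxil, path_dependenciesFile},
        inputs = {path_input},
        outputs = {path_output},
        arguments = {path_input,
            "-T", targetProfile, "-E", i_entryPoint, "-fdiagnostics-format=msvc",
            "-Fo", path_output, "-Zi", "-Fd", path_debugSymbols, "-Fc", path_assembly,
        },
        artifacts = {path_debugSymbols},
        postExecuteReturnDependenciesCallback = ReturnShaderDependencies,
        postExecuteReturnDependenciesCallbackUserData = path_dependenciesFile
    }
end
```

Nothing in this sketch removes the underlying problems discussed above (two invocations and a Lua callback are still involved); it only keeps them out of sight at each call site.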