I wanted to see what it would look like to trace only a single primary ray for each pixel but to randomly change the offset of the intersection within that pixel every frame. The results have to be seen in motion to be properly evaluated, but below is an example of a single frame:

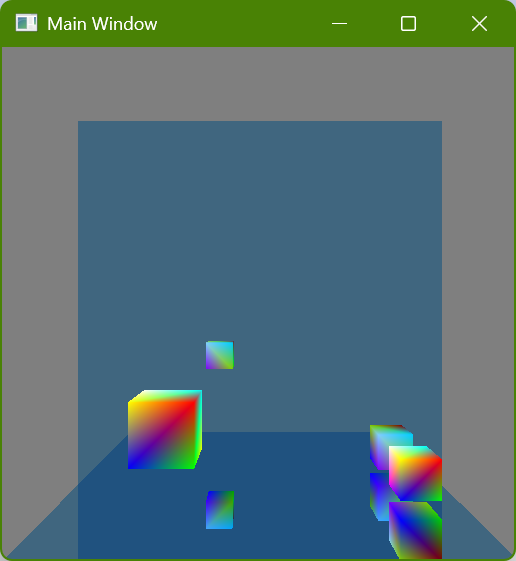

A gif also doesn’t properly show the effect because the framerate is too low, but it can give a reasonable idea:

If you want to see it in action you could download the program. The controls are:

- Arrow keys to move the block
  - I to move it away from you and K to move it towards you
- WASD to move the camera

The program relies on several somewhat new features, but if you have a GPU that supports ray tracing and a newish driver for it then the program will probably work. Although the program does error checking, there is no mechanism for reporting errors; this means that if you run it and it immediately exits, it may be because your GPU or driver doesn’t support the required features.

Samples and Filtering

In a previous post where I showed ray tracing, the rays intersected the exact center of every pixel, and that resulted in the same kind of aliasing that happens with standard rasterization (and for the same reason). This can be fixed by taking more samples (i.e. tracing more rays) for each pixel and then filtering the results, but it feels wasteful to trace more primary rays when the secondary rays are where I really want to be making the GPU work. I wondered what it would look like to trace only a single ray per pixel but to adjust the sample position differently every frame, somewhat like how dithering works.
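To make the idea concrete, here is a minimal Python sketch of per-frame jittering. It is not the code from the program (which runs on the GPU); the function names, the use of Python's `random` module, and the choice to seed by frame index are all assumptions made for illustration. Each pixel still gets exactly one sample position, but that position is a uniformly random offset inside the pixel instead of the fixed center.

```python
import random


def pixel_center(px, py):
    """Fixed sample position: the exact center of pixel (px, py)."""
    return (px + 0.5, py + 0.5)


def jittered_sample(px, py, rng):
    """One sample position inside pixel (px, py).

    The offset on each axis is drawn uniformly from [0, 1), so the ray
    lands somewhere in the pixel's area rather than always at the center.
    """
    return (px + rng.random(), py + rng.random())


def sample_positions(width, height, frame_index):
    """Sample positions for every pixel of one frame, as rows of (x, y).

    Seeding with the frame index (an assumption made for this sketch)
    gives every frame a different but reproducible jitter pattern.
    """
    rng = random.Random(frame_index)
    return [[jittered_sample(x, y, rng) for x in range(width)]
            for y in range(height)]
```

Calling `sample_positions(width, height, frame_index)` with an increasing `frame_index` gives a new set of offsets each frame, while `pixel_center` is what the earlier, aliased version would correspond to.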

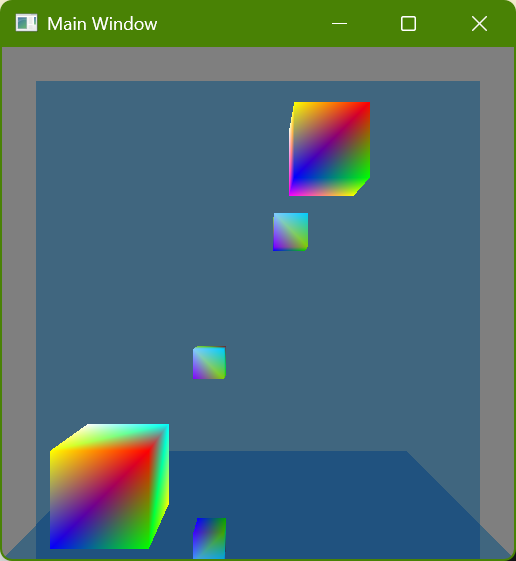

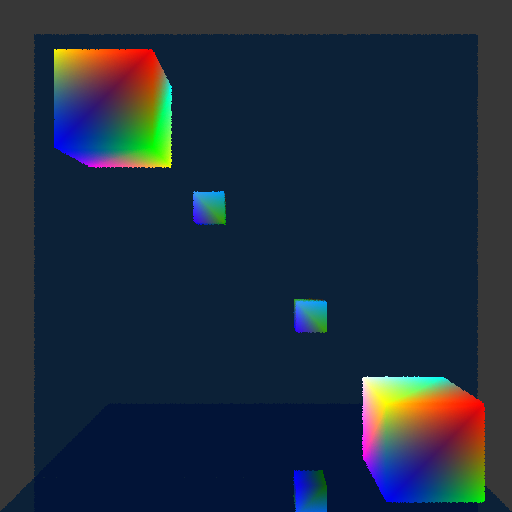

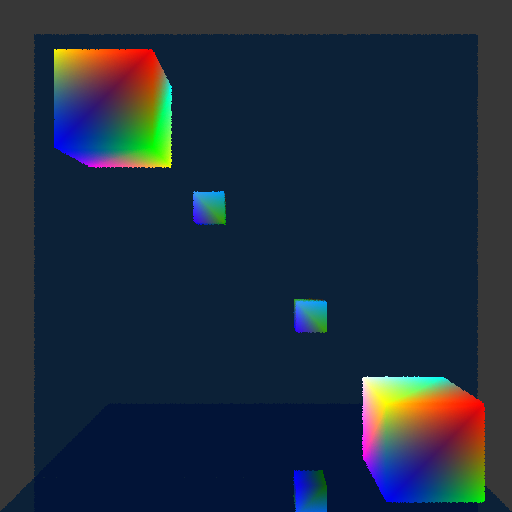

You can see a single frame capture of what this looks like below:

This is displaying linear color values as if they were sRGB gamma-encoded, which is why it looks darker (it is a capture from PIX of an internal render target). Ignore the difference in luminance, and instead focus on the edges of the cubes (and the edges of the mirror, too, although the effect is harder to see there). Every pixel will only show an intersection with a single object, and if the area around that pixel contains an object’s edge then by changing where the intersection happens in that area sometimes a pixel will show one object and sometimes another object. (Side note: Just to be clear, this effect happens at every pixel and not just at the edges of objects. If you look closely you might be able to tell that the color gradients also have noise in them. The edges of objects, however, are where the results of these jittered ray intersections are really noticeable.)
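The edge behavior can be illustrated with a tiny simulation. This is only a sketch under simplifying assumptions: a single pixel spanning `[0, 1)` horizontally, a hypothetical vertical edge at `edge_x` with object A to its left and object B to its right, and one jittered ray per frame. None of these names come from the program.

```python
import random


def visible_object(sample_x, edge_x):
    """Which object a ray hits: 'A' left of the edge, 'B' at or right of it."""
    return "A" if sample_x < edge_x else "B"


def fraction_showing_a(edge_x, frame_count, seed=0):
    """Fraction of frames in which a single jittered ray reports object A.

    The pixel spans [0, 1) horizontally and edge_x is where the
    (hypothetical, vertical) edge between A and B crosses it.
    """
    rng = random.Random(seed)
    hits = sum(visible_object(rng.random(), edge_x) == "A"
               for _ in range(frame_count))
    return hits / frame_count
```

With the edge at 0.3, a ray through the pixel center (`visible_object(0.5, 0.3)`) always reports B, whereas `fraction_showing_a(0.3, 10_000)` should come out close to 0.3. Over many frames the jittered pixel shows each object roughly in proportion to how much of the pixel it covers, but any single frame still shows only one of them, which is where the bumpy, flickering edges come from.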

In a single frame this makes the edges look bumpy. In a sequence of frames (like when the program is being run) the effect is more noisy; the edges still feel kind of bumpy but the bumpiness changes every frame. I had hoped that it might look pretty good in motion but unfortunately it is pretty distracting and doesn’t feel great.

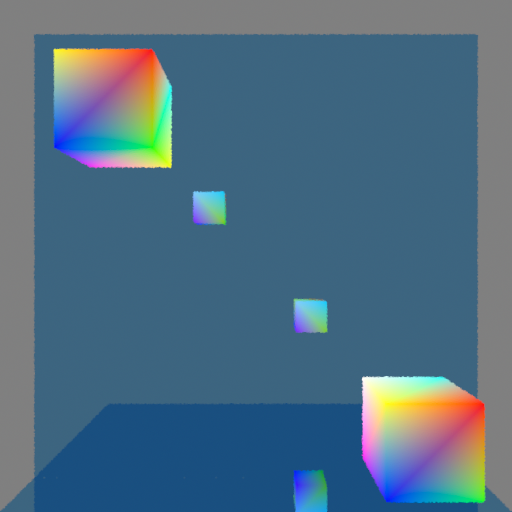

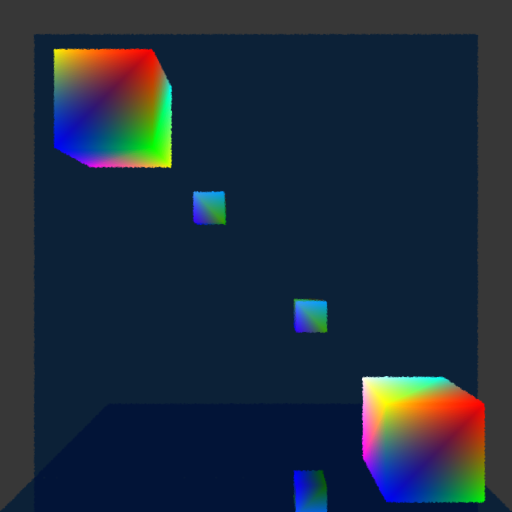

Even with only a single intersection per pixel, however, it is possible to use samples from neighboring pixels in order to filter each pixel. That means that you could have up to nine samples for each pixel, even though only a single one of those nine samples would actually be inside of the pixel’s area. Let me repeat the preceding screenshot but then also show the version that is using filtering with the neighboring eight pixels so that you can compare the two versions next to each other:

The same bumpy contours are still there, but now they have been smoothed out. This definitely looks better, but it also makes the edges feel blurry (which makes sense because that is exactly what is happening). The real question is: how does it look in motion?
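Before getting to that, here is a minimal sketch of the kind of neighborhood filtering described above. It assumes a single-channel image stored as a list of rows and uses equal weights for all samples (a plain box filter); the actual filter weights used in the program aren't stated here, so treat that choice, and the function name, as assumptions.

```python
def filter_with_neighbors(image):
    """Average each pixel with its (up to) eight neighbors.

    `image` is a list of rows of floats. Pixels on the border have fewer
    neighbors, so they average over fewer than nine samples.
    """
    height = len(image)
    width = len(image[0])
    result = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            total = 0.0
            count = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    # Skip neighbors that fall outside the image.
                    if 0 <= ny < height and 0 <= nx < width:
                        total += image[ny][nx]
                        count += 1
            result[y][x] = total / count
    return result
```

Because eight of the nine samples lie outside the pixel's own area, a filter like this trades the bumpy edges for blur, which matches what the filtered screenshot shows.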

Unfortunately this also didn’t look as good as I had hoped. It definitely does function like antialiasing, which is great, and you no longer see discrete lines of pixels appearing or disappearing as the cubes get closer to or farther from the camera. At the same time, the noise is more obvious than I had hoped. I think it is the kind of visual effect that some people won’t mind and will stop noticing but that other people will find really objectionable and constantly distracting. Again, if you’re interested, I encourage you to download the program yourself and try it to see what you think!

Is it Usable?

For now I am going to keep it. I like the idea of doing antialiasing “properly” and I think it will be interesting to see how this performs with smaller details (by moving the cubes very far from the camera I can get some sense of this, but I’d like to see it with actual detailed objects). There is also an obvious path for improvement by adding more samples per pixel, although the computational expense may not allow that.

I haven’t looked into denoisers beyond a very cursory understanding, so it’s not clear to me whether denoising would make this problem better or whether using this technique would actually prevent denoisers from working well.

I personally kind of like a bit of noise because it makes things feel more analog and real to me, but I realize that this is an individual quirk of mine that is far from universal (as evidenced by the loud complaints I read online when games have added film grain). Although the effect ends up being more distracting than I had hoped (I can’t claim that I would intentionally make it look like this if I had a choice) I still think it could potentially work as kind of an aesthetic with other noise. This will have to be evaluated further, of course, as I add more ray tracing effects with noise and create more complicated scenes with more complicated geometry.