This post expands on ideas that were introduced in the Frame Pacing in a Very Simple Scene post, and that earlier post should probably be read and understood before this one for context on what the profiler screenshots mean.

To skip to the images find the Visualizing in a Profiler section.

To skip to the sample programs find the Example Programs section or download them here.

In the previous post I had used the Tracy profiler to help visualize what my rendering code was doing; specifically I was interested in scheduling when CPU code would generate commands for the GPU to execute to make sure that it was happening when I wanted it to. I raised the question of why not generate GPU commands even earlier to make sure that they were ready in time and discussed why doing so would work with predetermined content (like video, for example), but would be less ideal for an interactive experience (like a game) because it would increase latency between the user providing input and the results of that input being visible on the display.

This post will show the work I have done to implement some user input that can influence the game simulation, and how that interacts with rendering frames for the display.

How to Coordinate Display Frames with Simulation Updates

A traditional display changes the image that it displays at a fixed rate, called the “refresh rate”. As an example, the most common refresh rate is 60 Hz, where “Hz” is the symbol for Hertz, a unit of frequency, which means that a display with a fixed refresh rate of 60 Hz is changing the image that it displays 60 times every second. This means that a program has to generate a new image and have it ready for the display 60 times every second. There is a fixed deadline enabled by something called vsync, and this puts a constraint on how often to render an image and when. (For additional details about dealing with vsync and timing, see the post Syncing without Vsync. For additional details about how to schedule the generation of display frames for vsync see the post that I have already referred to, Frame Pacing in a Very Simple Scene.)

Just because the display is updating at some fixed rate, however, doesn’t mean that the rest of the game needs to update at that same fixed rate, and it also doesn’t mean that the rest of the game should update at that same fixed rate. There is a very well-known blog post that discusses some of the reasons why, Fix Your Timestep!, which is worth reading if you haven’t already. In my own mental model the way that I think about this is that I want to have an interactive experience that is, conceptually, completely decoupled from the view that I generate in order to let the user see what is going on. The game should be able, conceptually, to run on its own with no window, and the player could also pick up a controller and influence that game, and whatever happens should happen the same way regardless of what it would look like to the player if there were a window (and regardless of what refresh rate, resolution, or anything else the player’s display happens to have).
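As a minimal sketch of that mental model (the type and function names here are hypothetical and not taken from my engine), a simulation that is decoupled from the display can be stepped with nothing but a fixed step size and the input for each step. The display never appears as a parameter:

```cpp
#include <cassert>
#include <vector>

// Hypothetical input sampled for one simulation update:
// -1 (left), 0 (nothing held), or +1 (right).
struct Input
{
    int horizontal;
};

// Hypothetical simulation state: a single horizontal position.
struct Simulation
{
    double position = 0.0;

    // Advances the simulation by exactly one fixed step.
    // Nothing about the display (refresh rate, resolution, or whether
    // a window even exists) is an argument.
    void Update(const Input& input, double stepSeconds, double speedPerSecond)
    {
        assert(stepSeconds > 0.0);
        position += input.horizontal * speedPerSecond * stepSeconds;
    }
};

// Runs the simulation with no window at all over a sequence of inputs
// and returns the final position.
inline double RunHeadless(const std::vector<Input>& inputs,
    double stepSeconds, double speedPerSecond)
{
    Simulation simulation;
    for (const Input& input : inputs)
    {
        simulation.Update(input, stepSeconds, speedPerSecond);
    }
    return simulation.position;
}
```

Because the same inputs and the same step size always produce the same final position, whatever draws the result can be swapped out (or removed entirely) without changing what happens in the game.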

My challenge since the last post, then, has been to figure out how to actually implement this mental model.

Display Loop

I am trying to design my engine to be platform-independent but the only actual platform that I am programming for currently is Windows; this means that behind all of the platform-independent interfaces there is only a single platform-specific implementation and it is for Windows. On Windows there is a very tight coupling between a window and a D3D12 swap chain, and there is also a very tight coupling between any window and the specific thread where it was created. Because of this, my current design is to have what I am calling the “display thread”, and this thread is special because it handles the main window event queue (meaning the events that come from the Windows operating system itself). This is also the thread where I submit the GPU render commands and requests to swap (for the swap chain) and that means that there is a built-in cadence in this thread that matches the fixed refresh rate.

What I needed to figure out was how to allow there to be a separate fixed update rate for the game simulation, which could either be faster or slower than the display refresh rate, and how to coordinate between the two. The strategy that was initially obvious to me was to have a separate thread that handled the simulation updates and then to have the display thread be able to coordinate with this simulation thread. I think that this would work fine (many games have a “game thread” and a “render thread”), but there is an aspect of this design that made me hesitate to implement it this way.

My impression is that over the past several years games have been moving away from having fixed threads with specific responsibilities and instead evolving to be more flexible where “jobs” or “tasks” can be submitted to any available hardware threads. The benefit of this approach is that it scales much better to any arbitrary number of threads. I have read about engines that don’t have a specific “game thread” or “render thread”, and the idea is appealing to me on a conceptual level. Although I don’t have any job system in place yet I feel like this is the direction that I at least want to aspire to, and that meant that I had to avoid my initial idea of having a separate simulation thread with its own update cadence.

Instead, I needed to come up with a design that would allow something like the following:

- It can be calculated which display frame needs to be rendered next, and what the time deadline is for that to be done

- Based on that, it can be calculated which simulation updates must happen in order to generate that display frame

- Based on that, some threads can update the simulation accordingly

- Once that is done some threads can start to generate the display frame

This general idea gave me a way to approach implementing simulation updates at some fixed rate that coordinated with display updates at some other fixed rate, even though I only have a single thread right now and no job system. Or, said another way, if I could have different multiple simultaneous (but arbitrary and changeable) update cadences in a single thread then it might provide a natural way forward in the future to allow related tasks to run on different threads without having to assign specific fixed responsibilities to specific fixed threads.
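The first two steps of that list can be sketched as plain arithmetic. This is only an illustration under assumptions that I am making for the example: all times are in milliseconds on one shared clock, simulation update 0 starts at time 0, and the "cutoff before the vblank" value is the one described later in this post. The names are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

struct DisplayFramePlan
{
    double vblankDeadlineMs;      // when the generated frame must be ready
    double inputCutoffMs;         // when rendering work is allowed to start
    std::int64_t endingUpdate;    // last simulation update that must be finished
    std::int64_t beginningUpdate; // the update before it
};

inline DisplayFramePlan PlanNextDisplayFrame(double lastVblankMs,
    double refreshPeriodMs, double cutoffBeforeVblankMs, double simulationUpdateMs)
{
    assert(refreshPeriodMs > 0.0 && simulationUpdateMs > 0.0);
    assert(cutoffBeforeVblankMs >= 0.0);

    DisplayFramePlan plan;
    // Step 1: which display frame is next, and what is its deadline?
    plan.vblankDeadlineMs = lastVblankMs + refreshPeriodMs;
    plan.inputCutoffMs = plan.vblankDeadlineMs - cutoffBeforeVblankMs;

    // Step 2: which simulation updates must have happened?
    // Update i covers [i * step, (i + 1) * step), so the number of updates
    // that end at or before the cutoff is floor(cutoff / step).
    const auto finishedCount = static_cast<std::int64_t>(
        std::floor(plan.inputCutoffMs / simulationUpdateMs));
    plan.endingUpdate = finishedCount - 1;
    plan.beginningUpdate = finishedCount - 2;
    return plan;
}
```

Steps three and four (running the required updates and then generating the display frame) would consume this plan. Nothing in it cares which thread does the work.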

Interpolation or Extrapolation?

There is something tricky that I don’t address in the simplified heuristic above: How can it be known which simulation updates must be done before a display frame can be generated? At a high level there are three options:

- Make the simulation and display tightly coupled. I already discussed why this is not what I want to do.

- Simulate as much as possible and then generate a display frame that guesses what will happen in the future. This involves taking known data and extrapolating what will happen next.

- Simulate as much as possible and then generate a display frame of what has already happened in the past. This involves taking known data and interpolating what has already happened.

For months I have been fascinated with #2, predicting what will happen in the future and then extrapolating the rendering accordingly. I am not personally into fighting games but somewhere I heard or read about “rollback netcode” and then started reading and watching as much as I could about it. Even though I have no intention of making a network multiplayer game I couldn’t shake the thought that the same technique could be used in a single-player game to virtually eliminate latency. I ultimately decided, however (and unfortunately), that this wasn’t the way to go because I wasn’t confident in my ability to deal with mispredictions in a way that wouldn’t be distracting visually. My personal priorities are for things to look good visually and less about reducing latency or twitch gaming, which means that if there is a tradeoff to be made it will usually be to increase visual quality. (With that being said, if you are interested I think that my single favorite source that I have found about these ideas is https://www.youtube.com/watch?v=W3aieHjyNvw. Maybe at some point in the future I will have enough experience to try tackling this problem again.)

That leaves strategy #3, interpolation. This means that in order to start generating a frame to display I can only work with simulation updates that have already happened in the past, and that I need to figure out when these required simulation updates have actually happened so that I can then know when it is ok to start generating a display frame.

Below is a capture I made showing visually what I mean by interpolation:

The two squares are updating at exactly the same time (once each second), and they have identical horizontal positions from the perspective of the simulation. The top square, however, is rendering with interpolation such that every frame that is generated for display places the square where the simulation would have been at some intermediate point between discrete updates. This gives the illusion of smooth motion despite the simulation only updating once each second (the GIF makes the motion look less smooth, but you can download and try one of the sample programs to see how it looks when interpolating at your display’s actual refresh rate).
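For a single value like the square's horizontal position, the blend itself can be sketched as an ordinary linear interpolation. The function name is hypothetical, and I am assuming that the blend is linear:

```cpp
#include <cassert>

// Blends between the positions produced by two consecutive simulation updates.
// amount == 0 gives the older (beginning) position,
// amount == 1 gives the newer (ending) position.
inline double InterpolatePosition(double beginningPosition,
    double endingPosition, double amount)
{
    assert(amount >= 0.0 && amount <= 1.0);
    return beginningPosition + (endingPosition - beginningPosition) * amount;
}
```

In terms of the capture, the bottom square is always drawn at the ending position, while the top square is drawn at the result of a blend like this with an amount that changes every display frame.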

Notice in the GIF how this interpolation scheme actually increases latency: The bottom square shows the up-to-date simulation, and the top square (what the user actually sees) is showing some blended state between the most up-to-date position and then the previous, even older, position. The only time that the user sees the current state of the simulation is at the moment when the simulation is the most out-of-date, immediately before it is about to be updated again. For a slow update rate like in the GIF this delay is very noticeable, but on the opposite extreme if the simulation is updating faster than the display rate then this is less of a problem. You can download and try the sample programs to find out how sensitive you are to this latency and how much of a difference you can personally notice between different simulation update rates.

The (Current) Solution

I have come up with two variables that determine the behavior of a simulation in my engine and how it gets displayed:

- The (fixed) duration of a simulation update, in milliseconds

- The (fixed) cutoff time before a display refresh, in milliseconds, when a display frame will start to be generated

This was a surprisingly (embarrassingly!) difficult problem for me to solve especially given how simple the solution seems, and I won’t claim that it is the best solution or that I won’t change the strategy as time goes on and I get more experience with how it works. Let me elaborate on the second point, though, since that was the tricky part (the first point is obvious and so there is not much to discuss).

I knew that I wanted the simulation to run independently of the display. Said another way, the simulation shouldn’t even know or care that there are any frames being displayed, and the framerate of the display should never have any influence on the simulation. Note that this also extends to user input: The same input from a user should have the same effect on the simulation regardless of what framerate that user’s display has.

On the other hand, it seemed like it was necessary to have the display frames have some kind of dependency on the simulation: In order to interpolate between two simulation frames there has to be some kind of offset involved where the graphics system knows how far between two frames it is. My brain kept thinking that maybe the offset needed to be determined by how long the display’s refresh period is, but I didn’t like that because it seemed to go against my goal of different players having the same experience regardless of refresh rate.

Finally I realized that it could be a (mostly) arbitrary offset from the vertical blank. What this represents conceptually is a tradeoff between latency (a smaller time is better) and computational requirements (it must be long enough that the CPU and GPU can actually generate a display frame before the vblank happens). Having it be a fixed offset, however, means that it will behave mostly the same regardless of what the display is doing. (There is still going to be some system-dependent latency: If DWM is compositing then there is an inherent extra frame of latency, and then there is some unpredictable time from when the GPU scans the frame out until it is actually visible on the display which is dependent on the user’s actual display hardware and how it is configured. What this current solution seems to do, however, is to minimize the effects of display refresh rate in the software domain that I have control over.)
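A small sketch may make the refresh-rate independence more concrete. The struct and function names are hypothetical, and for illustration I am assuming that vblank 0 happens at time 0:

```cpp
#include <cassert>

// Hypothetical settings struct holding the two values described above.
struct SimulationDisplaySettings
{
    double simulationUpdateMs;   // fixed duration of one simulation update
    double cutoffBeforeVblankMs; // fixed offset before each display refresh
};

// Time of the n-th vblank for a display with the given refresh rate.
inline double VblankTimeMs(long long vblankIndex, double refreshRateHz)
{
    assert(refreshRateHz > 0.0);
    return static_cast<double>(vblankIndex) * (1000.0 / refreshRateHz);
}

// Input cutoff for the display frame that must be ready at vblankMs.
// The refresh period is not an input here: only the fixed offset is.
inline double InputCutoffMs(const SimulationDisplaySettings& settings,
    double vblankMs)
{
    assert(settings.cutoffBeforeVblankMs >= 0.0);
    return vblankMs - settings.cutoffBeforeVblankMs;
}
```

Whatever the refresh rate is, the distance between the cutoff and the vblank that it belongs to stays the same. Only how often a cutoff occurs changes.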

Visualizing in a Profiler

In order to understand how the simulation is scheduled relative to rendering we can look at Tracy profile captures. This post won’t explain the basics of how to interpret these screenshots, but you can refer to the earlier post mentioned above for a more thorough explanation.

In all of the screenshots in this post my display refresh rate is set to 60 Hz which means that a generated image is displayed for 16.67 milliseconds (i.e. 1000 / 60 ms). I have set the time before the vblank to start rendering to be half of that, 8.33 ms. Note that this doesn’t mean that this time is in any way meaningful or that it is ideal! Instead, I have deliberately chosen this time to make it easier for you as a reader to envision what is happening; the cutoff for when input for simulation frames is no longer accepted is always going to be at the halfway point between display frames.

Remember: The cutoff for user input in these screenshots is arbitrarily chosen to be exactly halfway between display frames.

100 Hz Simulation

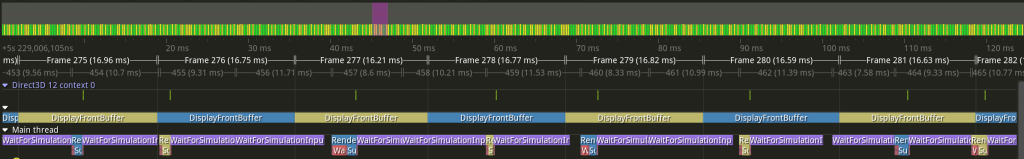

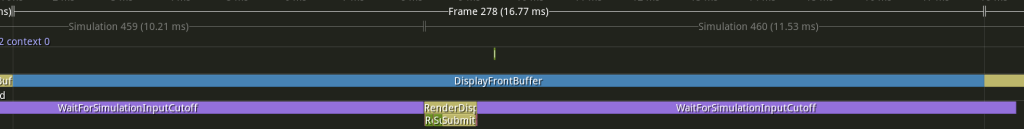

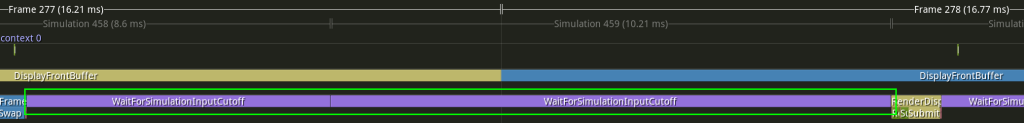

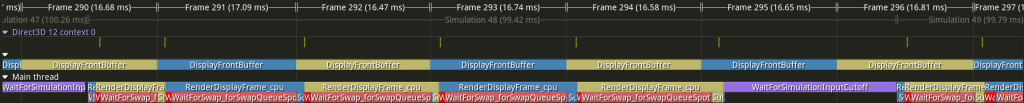

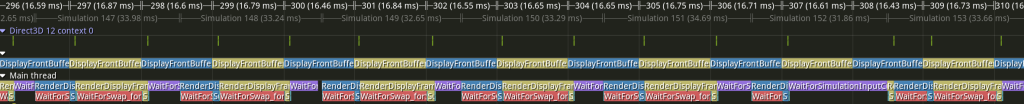

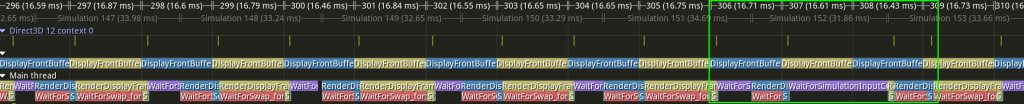

This first example has the simulation updating at 100 Hz, which means that each simulation update represents 10 ms (i.e. 1000 / 100):

As in the previous post, the DisplayFrontBuffer zones show when the display is changing, and it corresponds to the unnamed “Frames”, which each take 16.67 ms.

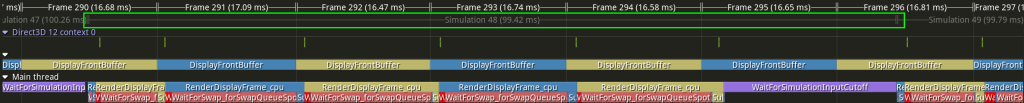

New in this post is a second kind of frame, which I’ve highlighted in green below:

This shows where the simulation frames are happening, and each lasts roughly 10 ms. In the previous two screenshots I have the default display frames selected, but Tracy also allows the other frames to be selected in which case it will show those frames at the top:

Each display frame takes 16.67 ms and each simulation frame takes 10 ms, and since these are not clean multiples the way that they line up is constantly varying. The following facts can help to understand and interpret these profile screenshots:

- The display frames shown are determined by a thread waiting for a vblank, and so this cadence tends to be quite regular and close to the ideal 16.67 ms

- The simulation frames shown are determined by whenever a call to Windows’s Sleep() function returns, and more specifically whenever a call to Sleep() returns that is after the required cutoff for input. This means that the durations shown are much more variable, and although helpful for visualization they don’t actually show the real simulation frames

  - Sleep() is notoriously imprecise; I have configured the resolution to be 1 ms, and newer versions of Windows have improved the call to behave more closely to how one might expect (see also here), but it is still probably not the best tool for what I am using it for.

  - For the purposes of this post you just need to keep in mind that the end of each simulation update shown in the screenshots is conservative: It is always some time after the actual simulation update has ended, but how long after is imprecise and (very) inconsistent.

- The simulation frames shown don’t include any work done to actually update the simulation. Instead they represent the timeline of logical simulation updates; one way to think of them is the window of time when the user can enter input to influence the following simulation update (although this mental model may also be confusing because it hints at additional latency between the user’s input and that input being used; we will ignore that for this post and focus more on the relationship between the simulation and the display but it will be something that I will have to revisit when I work on input).
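To illustrate why the simulation frame ends shown in the profiler are conservative, here is a sketch of the kind of wait loop described above. It is not my actual code: std::this_thread::sleep_for stands in for the Windows Sleep() call so that the example is portable, and the function name is hypothetical.

```cpp
#include <cassert>
#include <chrono>
#include <thread>

using Clock = std::chrono::steady_clock;

// Sleeps in small increments until `cutoff` has passed, and returns the
// time that was actually observed afterwards.
// Like Sleep(), sleep_for may return later than requested, so the
// returned time is only guaranteed to be at or after the cutoff.
inline Clock::time_point WaitUntilAfter(Clock::time_point cutoff,
    std::chrono::milliseconds sleepStep = std::chrono::milliseconds(1))
{
    assert(sleepStep.count() > 0);
    Clock::time_point now = Clock::now();
    while (now < cutoff)
    {
        std::this_thread::sleep_for(sleepStep);
        now = Clock::now();
    }
    return now; // never before the cutoff; how far after it is unpredictable
}
```

The returned time is what a profiler zone around this call would show as its end, which is why the ends of the zones in the screenshots are always somewhat late.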

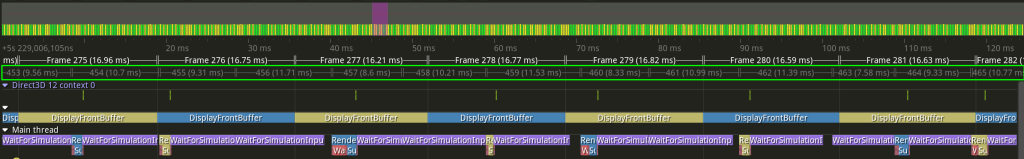

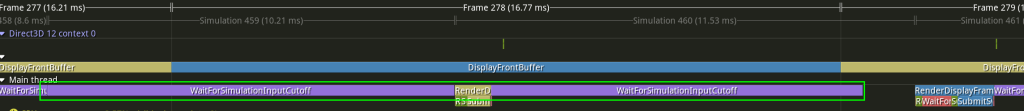

Let’s look at a single display frame, highlighted in green below:

The blue swap chain texture is currently being displayed (i.e. it is the front buffer), and so the yellow swap chain texture must be written to in order to be displayed next (i.e. it is the back buffer). The purple WaitForSimulationInputCutoff zones show how the 10 ms simulation updates line up with the 16.67 ms display updates. The cutoff that I have specified is 8.33 ms before the vblank, and so the last WaitForSimulationInputCutoff that we can accept for rendering must finish before the halfway point of that blue DisplayFrontBuffer zone. In this example that I have chosen the simulation frame ends a little bit before the halfway point, and then the work to generate a new display frame happens immediately. Here is a zoomed in version of that single display frame to help understand what is happening:

After the purple WaitForSimulationInputCutoff is done the CPU work to record and submit GPU commands to generate a display frame happens. In the particular frame shown in the screenshot there is no need to wait for anything (the GPU finished the previous commands long ago, and the yellow back buffer is available to be changed because the blue front buffer is being shown which means that recording and submission of commands can happen immediately). The GPU also does the work immediately (the little green zone above the blue DisplayFrontBuffer), and everything is ready to be displayed for the next vblank, where the yellow texture can now be swapped to become the front buffer.

The situation in this screenshot is probably the easiest one to understand: The yellow rendering work is generating a display frame about 8.33 ms before it has to be displayed. That display frame will show an interpolation between two simulation frames, and the last of those two simulation frames ended where that yellow rendering work begins.

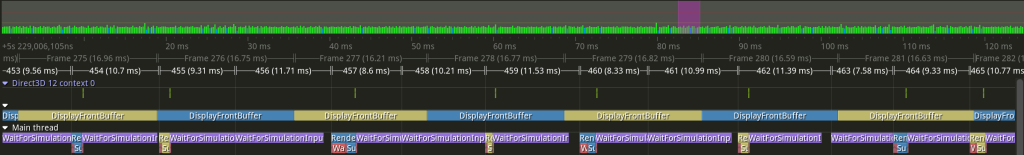

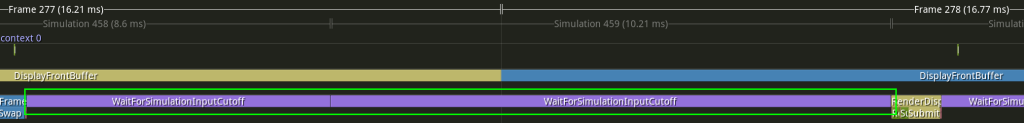

To try and make it more clear I’ve moved the timeline slightly earlier in the following screenshot so that we can see both simulation frames that are being used to generate the display frame, highlighted in green:

The yellow rendering work is now at the right of the screenshot (look at Frame 278 at the top to help orient yourself), and there are two purple WaitForSimulationInputCutoff zones highlighted. The yellow rendering work at the right of the screenshot is generating a display frame that contains an interpolation of some point in time between the first WaitForSimulationInputCutoff zone and the second WaitForSimulationInputCutoff zone.

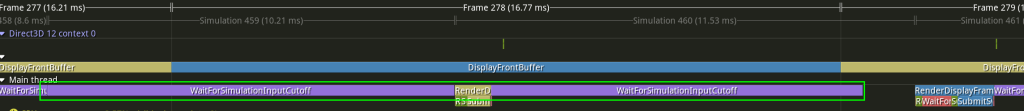

Let us now compare the next display frame, highlighted in green below:

Now the yellow texture is being displayed as the front buffer and the blue texture is the back buffer that must be modified. Because of the way that the two different 16.67 ms and 10 ms cadences line up, however, the last simulation frame that can be used for this display frame ends relatively earlier, which means that rendering work can begin relatively earlier (note how the blue CPU work happens earlier in the display frame in the highlighted Frame 279 compared to where the yellow CPU work happened in the preceding Frame 278 that we looked at previously). The way that I think about this is to ask “when will the next simulation frame end?” In this case it would end after the 8.33 ms cutoff (at the midpoint of yellow Frame 279), and so it can’t be used for this display frame.

The two simulation frames that are being used to generate Frame 279 are shown below highlighted in green:

The ending simulation frame is new, but the beginning simulation frame is the same one that was used as the ending simulation frame for the previous display frame. These examples hopefully give an idea of how the display frames are being generated independently of the cadence of the simulation frames, and how they are showing some “view” into the simulation by interpolating between the two most recently finished simulation frames. The code uses the arbitrary cutoff before the vblank (8.33 ms in these screenshots) in order to decide 1) what the two most recently finished simulation frames are and 2) how much to interpolate between the beginning and ending simulation frames for a given display frame.

When interpolating, how can the program determine how far between the beginning simulation frame and the ending simulation frame the display frame should be? The amount to interpolate is based on how close the display frame cutoff is to the end of the simulation frames. This can be confusing for me to think about, but the key insight is that it is dependent on the simulation update rate. The way that I think about it is that I take the cutoff in question (which, remember, happens after the ending simulation frame) and then subtract the duration of a simulation update from that. Whatever that time is must fall between the beginning and ending simulation update, and thus determines the interpolation amount.
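Written out as code, that reasoning looks something like the following sketch. The names are hypothetical, all times are in milliseconds on one clock, and I am assuming that each simulation update's result corresponds to the moment when that update ends:

```cpp
#include <cassert>

// cutoffMs:           the input cutoff of the display frame being generated
// endingUpdateEndMs:  when the ending simulation update ended
//                     (at or before the cutoff, and less than one update before it)
// simulationUpdateMs: the fixed duration of a simulation update
// Returns 0 when the display frame should show the beginning update and
// approaches 1 as it gets closer to the ending update.
inline double InterpolationAmount(double cutoffMs, double endingUpdateEndMs,
    double simulationUpdateMs)
{
    assert(simulationUpdateMs > 0.0);
    assert(endingUpdateEndMs <= cutoffMs);
    assert(cutoffMs - endingUpdateEndMs < simulationUpdateMs);

    // Take the cutoff and subtract the duration of one simulation update...
    const double displayedTimeMs = cutoffMs - simulationUpdateMs;
    // ...which must fall between the ends of the beginning and ending updates.
    const double beginningUpdateEndMs = endingUpdateEndMs - simulationUpdateMs;
    return (displayedTimeMs - beginningUpdateEndMs) / simulationUpdateMs;
}
```

For example, with 10 ms updates, an ending update that finished at 110 ms, and a cutoff at 115 ms, the displayed time is 105 ms. That is halfway between 100 ms and 110 ms, and so the amount is 0.5.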

I’m not sure whether to spend more time explaining that or whether it’s obvious to most people and possibly just confusing to me for some reason. I think that what makes it tricky for me to wrap my head around is that the interpolation is between two things that have both happened in the past (whereas if I were instead doing extrapolation with one update in the past and one in the future then it would be much more natural for how my brain tries to think about it). Rather than belaboring the point I will repeat the GIF of the two moving squares, which I think is an easier way to understand visually how the interpolation is working:

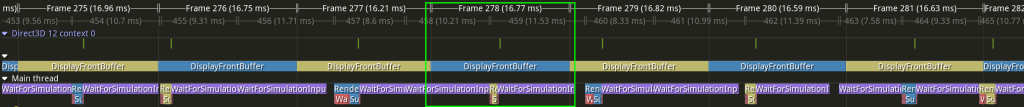

Let’s look again at the two simulation frames that each display frame uses, one after the other:

It is hard to say anything very precise about the interpolation amount because of the imprecision of the purple WaitForSimulationInputCutoff zones (because of the inherent imprecision of the Sleep() function), but we can at least make some generalizations using some intuitive reasoning:

- The earlier that the ending simulation update happens relative to the display frame cutoff, the more the interpolation amount will be biased towards the ending simulation update

- The closer that the ending simulation update happens relative to the display frame cutoff, the more the interpolation amount will be biased towards the beginning simulation update

With that in mind, in the first screenshot we can say that the yellow display frame work (which will be shown in Frame 279) is going to show something closer to the beginning simulation update (the first one highlighted in green); we can claim this because the ending simulation update ended so close to the 8.33 ms cutoff (halfway between the blue DisplayFrontBuffer zone), and if we subtract the 10 ms simulation update duration it is going to be pretty close to the end of the beginning simulation update.

In the second screenshot, by comparison, we can say that the blue display frame work (which will be shown in Frame 280) is going to show more of the ending simulation update (the second one highlighted in green); we can claim this because the ending simulation update ended quite some time before the 8.33 ms cutoff.

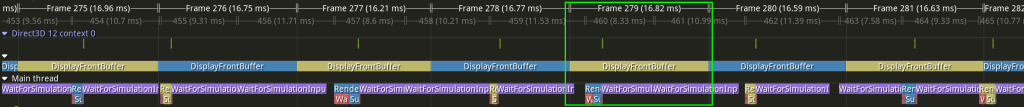

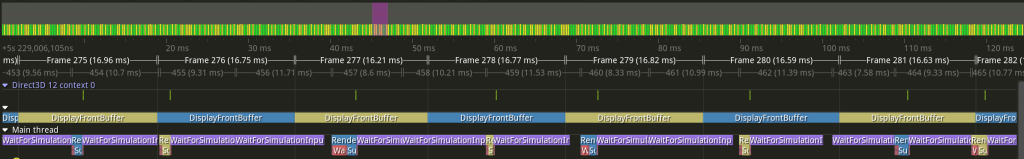

Before moving on to a different simulation update rate let’s look one more time at the original screenshot that shows many display and simulation frames:

This screenshot shows how the two different frame cadences line up at different points. It also shows how the rendering work starts being done as soon as it is possible, but that it isn’t possible until after the end of the last simulation update before the cutoff (again, remember that the cutoff is arbitrary but was chosen to be 8.33 ms so that it is easy to visualize as being at the halfway point of each display frame).

250 Hz

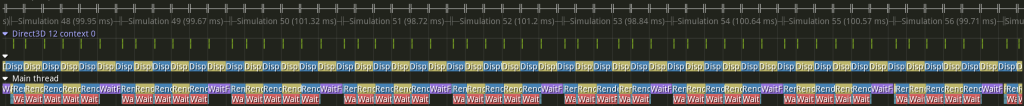

This next example has the simulation updating at 250 Hz, which means that each simulation update is 4 ms (i.e. 1000 / 250):

There are now more simulation updates for every display update (about 4, i.e. 16.67 ms / 4 ms), but otherwise this profile should look familiar if you were able to follow the previous discussion about 100 Hz. One interesting pattern is that the smaller the duration of simulation updates the more consistently close to the halfway cutoff the display frame render work will be (you can imagine it as taking a limit as simulation updates get infinitesimally small, in which case the display frame render work would conceptually never be able to start early).
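The limit argument can be sketched numerically. Under the simplifying assumption that simulation updates end at exact multiples of the update duration (real timings are messier, as discussed above), the amount of time by which rendering can start before the cutoff is always less than one simulation update. The function name is hypothetical:

```cpp
#include <cassert>
#include <cmath>

// Returns how long before the cutoff the last usable simulation update ends,
// in milliseconds. The result is always in [0, simulationUpdateMs).
inline double EarlyStartMs(double cutoffMs, double simulationUpdateMs)
{
    assert(simulationUpdateMs > 0.0 && cutoffMs >= 0.0);
    // End time of the last simulation update that finishes at or before the cutoff.
    const double lastEndMs =
        std::floor(cutoffMs / simulationUpdateMs) * simulationUpdateMs;
    return cutoffMs - lastEndMs;
}
```

With a cutoff at 25 ms, 10 ms updates allow rendering to start 5 ms early while 4 ms updates only allow 1 ms. The shorter the update, the smaller the upper bound on how early the work can begin.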

A high frequency of simulation updates like this would be the ideal way to run. The visual representation of the simulation would still be limited by the display’s refresh rate but the simulation itself would not be. Of course, the simulation is still constrained by how computationally expensive it is to update. In my example I’m just updating a single position using velocity and so it is no problem, but in a real game the logic and physics will take more time. You can download and try an example program to see how it feels to have the square’s position update at 250 Hz.

(I have also found that there are many more visual glitches/hitches running at this high frequency, meaning that display frames are skipped for various reasons. In my particular program this might be my fault, since the rendering work seems so simple (if I used a busy wait instead of Sleep(), for example, I might get better results), but it generally suggests that it probably isn’t possible to have such low latency with such a high refresh rate in a non-toy program. If I increase the cutoff time to give my code some buffer for unexpectedly long waits then things work much better, even at a high simulation update frequency.)

10 Hz

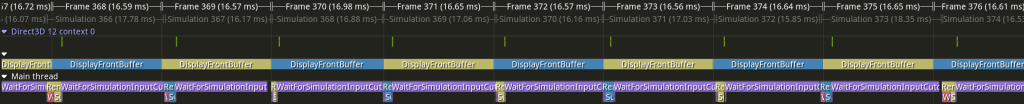

The next example has the simulation updating at 10 Hz, which means that each simulation update is 100 ms (i.e. 1000 / 10). Notably, this is a case of the opposite situation as the previous example: Rather than having many simulation updates for one display update this example has many display updates for one simulation update:

This example looks quite a bit different from the preceding two because the simulation update duration is so much longer than the display update duration. You can see that there are still purple WaitForSimulationInputCutoff zones, but they only occur when there is an actual need to wait, and that only happens when a simulation update is going to end during a display refresh period.

To make this more clear I have highlighted the actual simulation frame below in green:

Note that the simulation update itself is quite long, and the purple WaitForSimulationInputCutoff zone only happens at the end. If a display frame must be generated but the two required simulation updates that it is going to interpolate between have already happened then there is no need to wait for another one.
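One way to express that decision is sketched below. The names are hypothetical, and I am again assuming that simulation updates end at exact multiples of the update duration and that all times are in milliseconds on one clock:

```cpp
#include <cassert>
#include <cmath>

// nowMs:    when the code is ready to start preparing the next display frame
// cutoffMs: the input cutoff for that display frame
// Returns true if the ending simulation update needed for this display frame
// has not finished yet, in which case there is something to wait for.
inline bool MustWaitForSimulation(double nowMs, double cutoffMs,
    double simulationUpdateMs)
{
    assert(simulationUpdateMs > 0.0);
    assert(nowMs >= 0.0 && nowMs <= cutoffMs);
    // End time of the last simulation update that finishes at or before the cutoff.
    const double endingUpdateEndMs =
        std::floor(cutoffMs / simulationUpdateMs) * simulationUpdateMs;
    return endingUpdateEndMs > nowMs;
}
```

With 100 ms updates most display frames find that the required update ended long ago, and only the occasional display frame whose cutoff comes shortly after an update boundary has to wait.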

On the other hand, we do see new waits that are much more obvious than they were in the previous examples. These are the reddish waits, where the most obvious one is WaitForSwap_forSwapQueueSpot. I apologize for the awkward name (I have been trying different things, and I’m not in love with this one yet), but it is waiting for the currently-displayed front buffer to be swapped so that it is no longer displayed and can be modified as a back buffer. Rendering happens so quickly that a very large percentage of the display frame is spent waiting until the back buffer texture is available, after which (when the DisplayFrontBuffer color switches) some CPU work and then GPU work is done very quickly, and the waiting starts again (for additional information see the previous post that I have already referenced, although it was just called “WaitForSwap” there). In a real renderer with real non-trivial work to be done there would be less waiting, but that is dependent on how long it takes to record GPU commands (before the vblank) and how long it takes to submit and execute those commands (after the vblank).

Although it is hard to see any details it is also instructive to zoom out more and see what it looks like over the course of several simulation updates:

There should be 6 display frames for every simulation update, and this can be seen in the screenshot.

This 10 Hz rate of simulation update is too slow to be satisfying; you can download a sample program and try it to see what it feels like, but it is pretty sluggish. Still, it is good news that this works because it is definitely possible that a program might update its display faster than its simulation and so trying an extreme case like this is a good test.

60 Hz

This next example has the simulation updating at 60 Hz, which means that each simulation update is 16.67 ms (i.e. 1000 / 60). More notably, it means that the simulation is updating at exactly the same rate as the display:

The display frame cutoff is still 8.33 ms, halfway between vblanks, but since the simulation and display update at the same time the simulation update ends close to a vblank and so the display frame work is able to happen early, right after a vblank.

(Since the simulation is happening on the CPU there is probably some small real-world difference between the CPU clock and the display refresh rate which has its own display hardware clock. If I let this program run long enough there is probably some drift that could be observed and eventually the simulation updates would probably end closer to the cutoff (and then drift again, kind of going in and out of phase). Relatedly, I could probably intentionally make it not line up when the program starts if I put some work into it; the alignment is most likely an accidental result of how and when I am starting the simulation which is probably coincident with some display frame.)

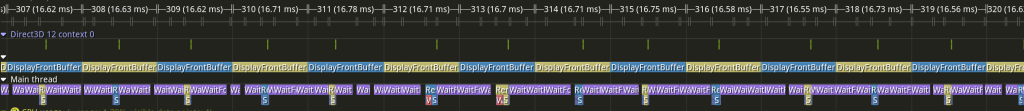

30 Hz

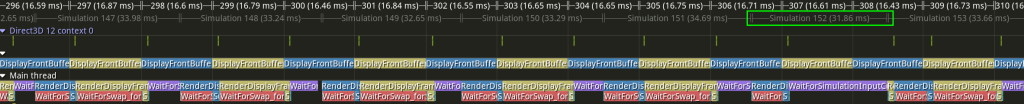

This next example has the simulation updating at 30 Hz, which means that each simulation update is 33.33 ms (i.e. 1000 / 30), and also that the display updates twice for every single simulation update:

This shows what we would have expected: There are two display frames for every one simulation update, and that means that there is only a purple WaitForSimulationInputCutoff zone in the display frame where a simulation update is ending before the halfway 8.33 ms cutoff.

There is one part of the screenshot, however, that is interesting and perhaps surprising, during display Frame 307. I’ve highlighted it in green below:

The previous pattern suddenly changes, and there is an unusually long WaitForSimulationInputCutoff zone. It kind of looks like there was a missed display frame (which can happen, although I haven’t shown it in this post; the program can fail to render a display frame in time and then needs to recover), but if we look at the pattern of alternating blue and yellow in the Main thread this isn’t actually the case.

The key to understanding what is happening is to look at the simulation frames rather than focusing on the display frames:

If you look at Simulation 152 and how it lines up with the work in the Main thread you may be able to figure out what is going on. This is an example of things (expectedly) going out of phase, and the code (correctly) dealing with it. If you compare Simulation 152 to the preceding Simulation 151 and subsequent Simulation 153 you can see that nothing unusual happened, and the simulation updates are happening approximately every 33.33 ms, as expected. What is unusual, however, is that Simulation 152 is ending right before the halfway cutoff for simulation updates, and so that yellow rendering work (for display frame 309) can’t start until Simulation 152 has finished. In the context of this screenshot it looks very scary because there is only 8.33 ms for the entire display frame to be generated, but this is the exact cutoff that I have chosen, meaning that I have told the program “don’t worry, I can do everything I need to in 8.33 ms and so use that as the cutoff for user input”. All of the other display frames in the screenshot have much more time (they have to wait for the front buffer to be swapped before they can even submit GPU commands), but this particular instance is doing exactly what I have told it to do, and is just a curious result of the different clocks of the CPU and display not being exact (which, again, is expected). (Notice that immediately after the yellow work with the tight deadline there is blue work, which puts the graphics system back on the more usual schedule where it is done ahead of time and can wait.)

Example Programs

I have made some example programs that have different simulation update rates but are otherwise identical. You can download them here.

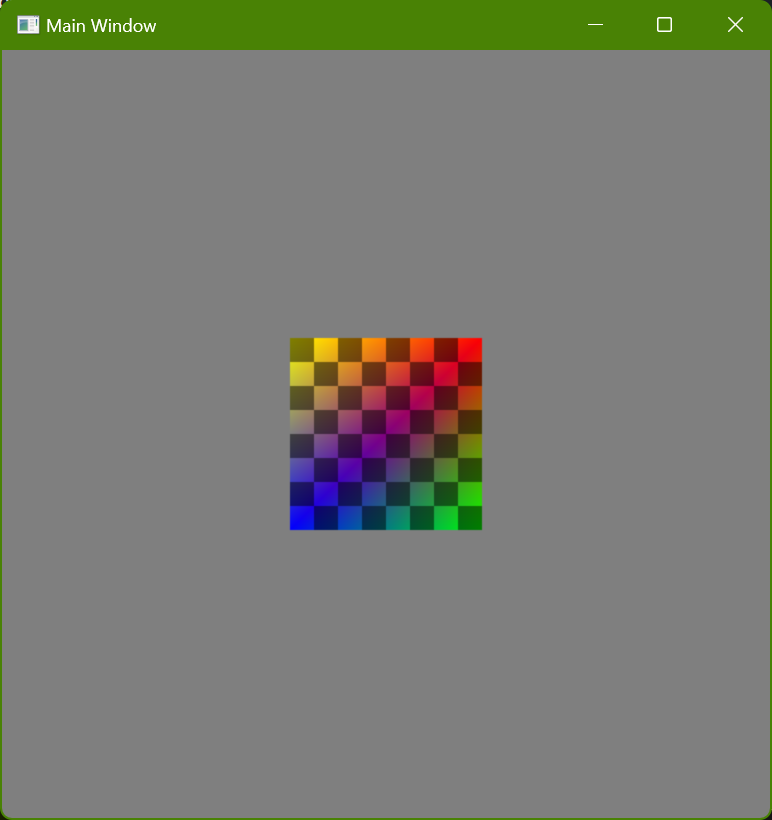

Running one of the sample EXEs will, if it doesn’t crash 🙃, show a window that looks like this:

You can move the square using the arrow keys.

The square always moves in discrete steps, at whatever frequency the EXE filename indicates. Visually, however, the displayed frames use interpolation, as discussed in this post. (There are two alternate versions of the slowest update frequencies that don’t do any interpolation; these can be useful to run and compare with the versions that do use interpolation in order to better understand what is happening and why the slow frequencies feel kind of weird and sluggish, even though they look smooth.)
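As a reminder of what interpolating between discrete steps means, here is a minimal sketch. It assumes plain linear interpolation between the two most recent simulation states; the struct and function names are hypothetical, and the example programs may differ in the details.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical names, not taken from the example programs.
struct SimulationState
{
    double timeMs;    // When this simulation update ended
    double positionX; // Where the square was at that time
};

// Returns the position to draw for a display frame at displayTimeMs,
// assuming linear interpolation between two consecutive simulation states.
double InterpolatePosition(const SimulationState& previous,
                           const SimulationState& current,
                           double displayTimeMs)
{
    assert(current.timeMs > previous.timeMs);
    const double stepDurationMs = current.timeMs - previous.timeMs;
    double t = (displayTimeMs - previous.timeMs) / stepDurationMs;
    // Clamp so that a display time outside of the step never extrapolates
    t = std::max(0.0, std::min(1.0, t));
    return previous.positionX + t * (current.positionX - previous.positionX);
}
```

The simulation itself only ever knows about `previous.positionX` and `current.positionX`. Every position in between exists only in the displayed frames, which is why the motion can look smooth even when the underlying updates are slow.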

The cutoff before the vblank is set to 10 ms. This is slightly longer than the 8.33 ms cutoff used for all of the screenshots in this post, but it is still short enough that glitches aren’t unusual at the higher simulation update frequencies. In a real program that is doing serious rendering work more time would probably be needed, but for the purposes of these demonstrations I wanted to try to minimize latency.

These programs can be fun to run and see how sensitive you personally are to latency. Try not to focus on any visual hitches (where e.g. a frame might be skipped), but instead try to concentrate on whether it feels like the square responds instantly to pressing a key or releasing it, or whether it seems like there is a delay. Some things to try:

- Really quick key presses. I generally use the same direction and then tap a rapid staccato pattern. This is what I personally am most sensitive to, and it is where the higher frequencies feel noticeably better.

- Holding down a key so the square moves with some constant velocity and then releasing it. I personally am not bothered as much by a delayed start (I know that some people are) as I am by a delayed stop (where the square keeps moving after I’ve let go of a key).

- At the lowest frequencies (5 Hz and 10 Hz) move the square close to the edge of the window and then try to tap quickly to move it in and out, so that it alternates between a grey border being visible and not being visible. If you do this using the versions without interpolation it becomes pretty easy to intuitively understand how the square is moving in discrete steps (because there is a fixed velocity used with the fixed simulation timesteps), and then if you try the same thing with interpolation you can observe the same thing. Even though it looks smooth the actual behavior is tied to a grid, both spatially and temporally, and that’s a large part of why it feels weird (and why sometimes one key press might feel better or worse than another key press, depending on how closely that key press aligns with the temporal “grid”).
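The temporal “grid” in the last item can be sketched with a bit of arithmetic. This is a minimal illustration, assuming that a key press can only take effect at the start of the next simulation update and that updates are perfectly periodic starting at time zero; the function name is hypothetical and the example programs may handle input differently.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical helper, not taken from the example programs.
// Returns how long a key press has to wait (in microseconds) before the next
// simulation update starts, assuming updates start at multiples of the period.
std::int64_t DelayUntilNextSimulationUpdateUs(std::int64_t keyPressTimeUs,
                                              std::int64_t simulationPeriodUs)
{
    assert(keyPressTimeUs >= 0);
    assert(simulationPeriodUs > 0);
    const std::int64_t timeIntoCurrentStepUs = keyPressTimeUs % simulationPeriodUs;
    // A press that lands exactly on a boundary doesn't have to wait at all
    return (timeIntoCurrentStepUs == 0) ? 0 : (simulationPeriodUs - timeIntoCurrentStepUs);
}
```

At 5 Hz the period is 200000 µs, and so under these assumptions a key press 10000 µs into a step waits 190000 µs, while a press 199000 µs into a step waits only 1000 µs. That spread is one way to think about why two key presses that feel identical to your fingers can feel different on screen.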