I have recently integrated the Tracy profiler into my engine and it has been a great help to be able to visualize how the CPU and GPU are interacting. Even though what is being rendered is as embarrassingly simple as possible there were some things I had to fix that weren’t behaving as I had intended. Until I saw the data visualized, however, I wasn’t aware that there were problems! I have also been using PIX for Windows, Nsight, RenderDoc, and GPUView, but Tracy has been especially useful because it presents information across multiple frames in a way that I can customize to see the relationships that I want to see. I thought that it might be interesting to post about some of the issues with screenshots from the profiler while things are still simple and relatively easy to understand.

Visualizing Multiple Frames

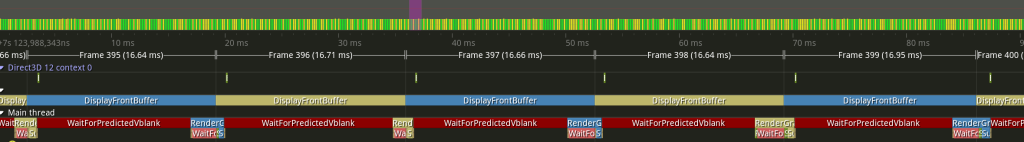

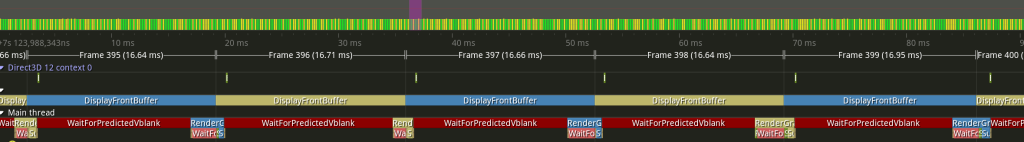

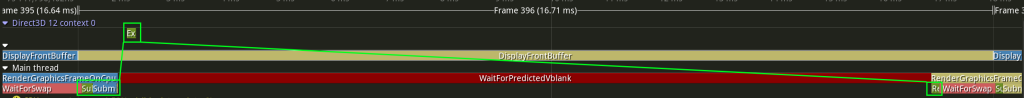

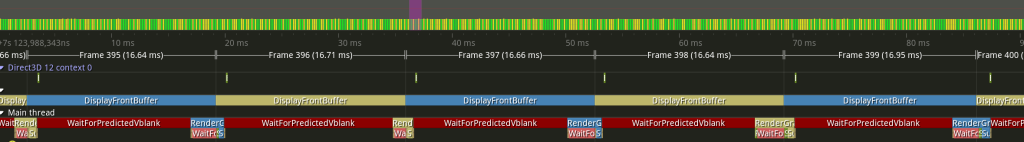

Below is a screenshot of a capture from Tracy:

I have zoomed in at a level where 5 full frames are visible, with a little bit extra at the left and right. You can look for Frame 395, Frame 396, Frame 397, Frame 398, and Frame 399 to see where the frames are divided. These frame boundaries are explicitly marked by me, and I am doing so in a thread dedicated to waiting for IDXGIOutput::WaitForVBlank() and marking the frame; this means that a “frame” in the screenshot above indicates a specific frame of the display’s refresh cycle.
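As a minimal sketch of what the body of such a heartbeat thread could look like (not my actual engine code): the function name `runVBlankHeartbeat` and its callable parameters are hypothetical, chosen so that the loop can be exercised without a display. In a real build the wait would presumably wrap `IDXGIOutput::WaitForVBlank()` and the mark would invoke Tracy's `FrameMark` macro.

```cpp
#include <cassert>
#include <functional>

// Hypothetical helper: the loop a dedicated heartbeat thread would run.
// Every time the vblank wait returns, the frame boundary is marked.
// Returns how many frames were marked before keepRunning() returned false.
inline int runVBlankHeartbeat(const std::function<bool()>& keepRunning,
                              const std::function<void()>& waitForVBlank,
                              const std::function<void()>& markFrame)
{
    assert(keepRunning && waitForVBlank && markFrame); // all callables must be set
    int framesMarked = 0;
    while (keepRunning()) {
        waitForVBlank(); // assumption: wraps IDXGIOutput::WaitForVBlank()
        markFrame();     // assumption: wraps Tracy's FrameMark macro
        ++framesMarked;
    }
    return framesMarked;
}
```

The function would be started on its own `std::thread`, so blocking in the wait never stalls the main thread.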

There is a second frame visualization at the top of the screenshot where there are many green and yellow rectangles. Each one of those represents the same kind of frames that were discussed in the previous paragraph, and the purple bar shows where in the timeline I am zoomed into (it’s hard to tell because it’s so small but there are 7 bars within the purple section, corresponding to the 1 + 5 + 1 frames visible at the level of zoom).

In addition to marking frames Tracy allows the user to mark what it calls “zones”. This is a way to subdivide each frame into separate hierarchical sections in order to visualize what is happening at different points in time during a frame. There are currently three threads shown in the capture:

- The main thread (which is all that my program currently has for doing actual work)

- An unnamed thread which is the vblank heartbeat thread

- GPU execution, which is not a CPU thread but instead shows how GPU work lines up with CPU work

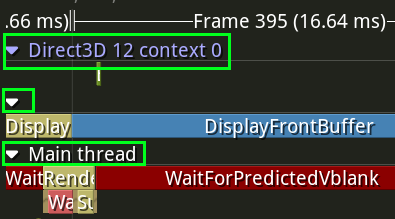

I’ve highlighted those three threads in green in the screenshot below:

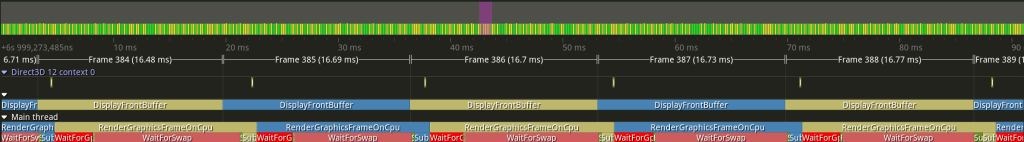

To help me make sure that I was understanding things properly I have color coded some zones according to which swap chain texture is relevant. At the moment my swap chain only has two textures (meaning that there is only a single back buffer at any one time and the two textures just get toggled between being the front buffer or back buffer any time a swap happens) and they are shown with DarkKhaki and SteelBlue. In the heartbeat thread the DisplayFrontBuffer zone is colored according to which texture is actually being displayed during that frame (actually this is not true because of the Desktop Window Manager compositor, but for the purposes of this post we will pretend that it is true conceptually).
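The color choice can be sketched as a small lookup from swap chain texture index to color. This is only an illustration: the function names and the assignment of index 0 to DarkKhaki are assumptions, and the values are the standard X11 colors in 0xRRGGBB form. In Tracy the result could presumably be passed to the `ZoneColor` macro inside a CPU zone.

```cpp
#include <cassert>
#include <cstdint>

// Standard X11 color values (0xRRGGBB).
const std::uint32_t kDarkKhaki = 0xBDB76B;
const std::uint32_t kSteelBlue = 0x4682B4;

// The swap chain described in the post has exactly two textures.
const unsigned kSwapChainTextureCount = 2;

// Hypothetical mapping: which texture gets which color is an assumption.
inline std::uint32_t zoneColorForSwapChainTexture(unsigned textureIndex)
{
    assert(textureIndex < kSwapChainTextureCount);
    return textureIndex == 0 ? kDarkKhaki : kSteelBlue;
}

// With two textures, the back buffer is whichever one is not the front buffer.
inline unsigned backBufferIndex(unsigned frontBufferIndex)
{
    assert(frontBufferIndex < kSwapChainTextureCount);
    return (frontBufferIndex + 1) % kSwapChainTextureCount;
}
```

The heartbeat thread would color its zone with the front buffer's index, and the main thread would color its zone with the back buffer's index.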

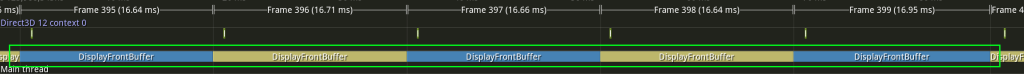

I’ve highlighted the alternating colors of DisplayFrontBuffer for each frame in green in the screenshot below, which you can use to see which swap chain texture is being displayed during each frame:

I have used the same colors in the main CPU thread to show which swap chain texture GPU commands are being recorded and submitted for. In other words, the DarkKhaki and SteelBlue colors identify a specific swap chain texture, the heartbeat thread shows when that texture is the front buffer, and the main thread shows when that texture is the back buffer. At the current level of zoom it is hard to read anything in the relevant zones but the colors at least give an idea of when the CPU is doing work for a given swap chain texture before it is displayed.

I’ve highlighted the alternating colors of RenderGraphicsFrameOnCpu for each frame in green in the screenshot below, which you can use to see which swap chain texture will be modified during each frame:

Unfortunately for this post I don’t think that there is a way to dynamically modify the colors of zones in the GPU timeline (instead it seems to be a requirement that they are known at compile time) and so I can’t make the same visual correspondence. From a visualization standpoint I think it would be nice to show some kind of zone for the present queue (using Windows terminology), but even without that it can be understood implicitly. I will discuss the GPU timeline more later in the post when things are zoomed in further.

With all of that explanation let me show the initial screenshot again:

Hopefully it makes some kind of sense now what you are looking at!

Visualizing a Single Frame

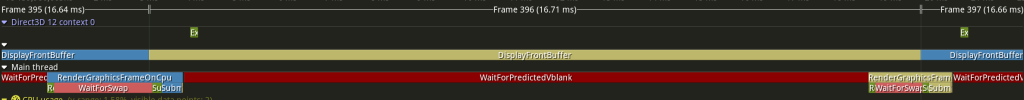

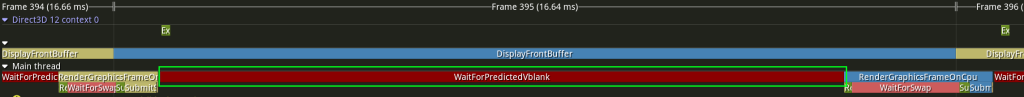

Let us now zoom in further to just look at a single frame:

During this Frame 396 we can see that the DarkKhaki texture is being displayed as the front buffer. That means that the SteelBlue texture is the back buffer, which is to say that it is the texture that must be modified so that it can then be shown during Frame 397.

Look at the GPU timeline. There is a very small OliveDrab zone that shows work being done on the GPU. That is where the GPU actually modifies the SteelBlue back buffer texture.

Now look at the CPU timeline. There is a zone called RenderGraphicsFrameOnCpu which is where the CPU is recording the commands for the GPU to execute and then submitting those commands (zones are hierarchical, and so the zones below RenderGraphicsFrameOnCpu are showing it subdivided even further). The color is SteelBlue, and so these GPU commands will modify the texture that was being displayed in Frame 395 and that will again be displayed in Frame 397. You may notice that this section starts before the start of Frame 396, while the SteelBlue texture is still the front buffer and thus is still being displayed! In order to better understand what is happening we can zoom in even further:

Compare this with the previous screenshot. This is the CPU work being done at the end of Frame 395 and the beginning of Frame 396, and it is the work that will determine what is displayed during Frame 397.

The work that is done can be thought of as:

- On the CPU, record some commands for the GPU to execute

- On the CPU, submit those commands to the GPU so that it can start executing them

- On the CPU, submit a swap command to change the newly-modified back buffer into the front buffer at the next vblank after all GPU commands are finished executing

- On the GPU, execute the commands that were submitted

It is important that the GPU doesn’t start executing any commands that would modify the SteelBlue swap chain texture until that texture becomes the back buffer (and is no longer being displayed). The WaitForSwap zone shows where the CPU is waiting for the swap to happen before submitting the commands (which triggers the GPU to start executing the commands). There is no reason, however, that the CPU can’t record commands ahead of time, as long as those commands aren’t submitted to the GPU until the SteelBlue texture is ready to be modified. This is why the RenderGraphicsFrameOnCpu zone can start early: It records commands for the GPU (you can see a small OliveDrab section where this happens) but then must wait before submitting the commands (the next OliveDrab section).
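The ordering constraint can be sketched as follows. This is an illustration under assumptions rather than real D3D12 code: `FrameSteps` and its members are hypothetical stand-ins for the actual recording, waiting, submitting, and presenting calls, and the function simply borrows the name of the zone.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical stand-ins for the real graphics API calls.
struct FrameSteps {
    std::function<void()> recordCommands; // CPU only; does not touch the swap chain texture
    std::function<void()> waitForSwap;    // blocks until the target texture is the back buffer
    std::function<void()> submitCommands; // lets the GPU start executing the recorded commands
    std::function<void()> submitSwap;     // queues the swap for a vblank after the GPU finishes
};

// Recording may overlap with the texture still being displayed,
// but submission must come after the swap has happened.
inline void renderGraphicsFrameOnCpu(const FrameSteps& steps)
{
    assert(steps.recordCommands && steps.waitForSwap &&
           steps.submitCommands && steps.submitSwap);
    steps.recordCommands();
    steps.waitForSwap();
    steps.submitCommands();
    steps.submitSwap();
}
```

The only step that must wait for the swap is the submission. Recording is placed before the wait so that CPU time that would otherwise be spent idle is put to use.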

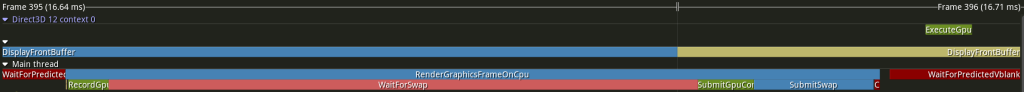

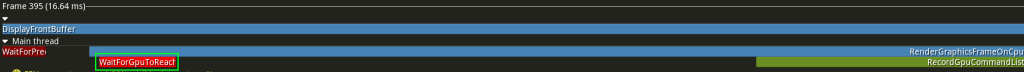

How early can the CPU start recording commands? There are two different answers to this, depending on how the application works. The simple answer (well, “simple” if you understand D3D12 command allocators) is that recording can start as soon as the GPU has finished executing the previously submitted commands that are stored in the memory that the new recording is going to reuse. There is a check for this in my code that is so small that it can only be seen if the profiler is zoomed in even further.

I’ve highlighted the WaitForGpuToReachSwap zone in green in the screenshot below, which shows where the CPU made sure that it was ok to start recording commands for the GPU:

This wait is so short because the GPU work being done is so simple that it reached the submitted swap long before the CPU checked to make sure.
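A minimal sketch of that kind of check, assuming the usual fence pattern where the GPU signals a monotonically increasing value as it finishes each submission. The `CommandMemory` struct and function names are hypothetical; in D3D12 the completed value would presumably come from `ID3D12Fence::GetCompletedValue()`.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical bookkeeping for one block of command memory that gets reused.
struct CommandMemory {
    std::uint64_t fenceValueAtLastSubmit; // 0 means "never submitted"
};

// Remember which fence value the GPU will signal once it has finished
// executing the commands stored in this memory.
inline void markSubmitted(CommandMemory& memory, std::uint64_t fenceValue)
{
    assert(fenceValue > memory.fenceValueAtLastSubmit); // fence values only increase
    memory.fenceValueAtLastSubmit = fenceValue;
}

// Recording into this memory is safe once the GPU has reached that value.
inline bool canStartRecording(const CommandMemory& memory,
                              std::uint64_t completedFenceValue)
{
    return completedFenceValue >= memory.fenceValueAtLastSubmit;
}
```

When the GPU work is tiny, as it is here, the check passes immediately and the CPU never actually has to block.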

I’ve highlighted the path from submitting GPU commands on the CPU to executing GPU commands to waiting for GPU commands to finish executing on the CPU in green in the screenshot below:

Do you see that long line between executing the GPU commands and then recording new ones on the CPU? With the small amount of GPU work that my program is currently doing (clearing the texture and then drawing two quads) there isn’t anything to wait for by the time I am ready to start recording new commands.

If you’ve been following you might be asking yourself why I don’t start recording GPU commands even sooner. Based on what I’ve explained above the program could be even more efficient and start recording commands as soon as the GPU was finished executing the previous commands, and this would definitely be a valid strategy with the simple program that I have right now:

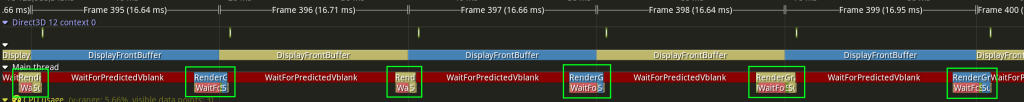

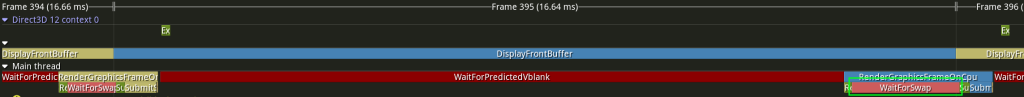

This is a capture that I made after I modified my program to record new GPU commands as soon as possible. The WaitForPredictedVblank zone is gone, the WaitForGpuToReachSwap zone is now visible at this level of zoom, and the WaitForSwap zone is now bigger. The overlapping of DarkKhaki and SteelBlue is much more pronounced because the CPU is starting to work on rendering a new version of the swap chain texture as soon as that swap chain texture is displayed to the user as a front buffer (although notice that the commands still aren’t submitted to the GPU until after the swap happens and the texture is no longer displayed to the user). Based on my understanding this kind of scheduling probably represents something close to the ideal situation if 1) a program wants to use vsync and 2) knows that it can render everything fast enough within one display refresh and 3) doesn’t have to worry about user input.

The next section explains what the WaitForPredictedVblank is for and why user input makes the idealized screenshot above not as good as it might at first seem.

When to Start Recording GPU Commands

Earlier I said that there were two different answers to the question of how early the CPU can start recording commands for the GPU. In my profile screenshots there is a DarkRed zone called WaitForPredictedVblank that I haven’t explained yet, but we did observe that it could be removed and that doing so allowed even more efficient scheduling of work. This WaitForPredictedVblank zone is related to the second alternate answer of when to start recording commands.

I’ve highlighted the WaitForPredictedVblank zone in green in the screenshot below:

My end goal is to make a game, which means that the application is interactive and can be influenced by the player. If my program weren’t interactive but instead just had to render predetermined frames as efficiently as possible (something like a video player, for example) then it would make sense to start recording commands for the GPU as soon as possible (as shown in the previous section). The requirement to be interactive, however, makes things more complicated.

The results of an interactive program are non-deterministic. In the context of the current discussion this can be thought of as an additional constraint on when commands for the GPU can start being recorded, which is so simple that it is kind of funny to write out: Commands for the GPU to execute can’t start being recorded until it is known what the commands for the GPU to execute should be. The amount of time between recording GPU commands and the results of executing those commands being displayed has a direct relationship to the latency between a user providing input and the user seeing the result of that input on a display. The later that the contents of a rendered frame are determined the less latency the user will experience.

All of that is a long way of explaining what the WaitForPredictedVblank zone is: It is a placeholder in my engine for dealing with game logic and simulation updates. I can predict when the next vblank is (see the Syncing without VSync post for more details), and I am using that as a target for when to start recording the next frame. Since I don’t actually have any work to do yet I am doing a Sleep() in Windows, and since the results of sleeping have limited precision I only sleep until relatively close to the predicted vblank and then wait on the more reliable swap chain waitable object (this is the WaitForSwap zone):

(Side note: Being able to visualize this in the instrumented profile gives more evidence that my method of predicting when the vblank will happen is pretty reliable, which is gratifying.)
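The "sleep until close, then wait precisely" idea can be sketched like this. The function name and the safety margin parameter are assumptions for illustration; how large the margin should be depends on how imprecise the sleep is on a given system.

```cpp
#include <cassert>
#include <chrono>

using Clock = std::chrono::steady_clock;

// Hypothetical helper: when should the imprecise sleep end?
// It ends `margin` before the predicted vblank so that an oversleep
// is unlikely to miss it, and it never returns a time in the past.
inline Clock::time_point coarseWakeTime(Clock::time_point now,
                                        Clock::time_point predictedVBlank,
                                        Clock::duration margin)
{
    assert(margin >= Clock::duration::zero());
    const Clock::time_point target = predictedVBlank - margin;
    return target > now ? target : now;
}

// Intended use (not executed here):
//   std::this_thread::sleep_until(coarseWakeTime(Clock::now(), predicted, margin));
//   ...then block on the more precise swap chain waitable object.
```

If the predicted vblank is already closer than the margin, the function returns the current time, so the sleep is skipped and the precise wait takes over.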

The next step will be to implement simulation updates using fixed timesteps and then record GPU commands at the appropriate time, interpolating between the two appropriate simulation updates. That will remove the big WaitForPredictedVblank, and instead there will be some form of individual simulation updates which should be visible.
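For reference, the common accumulator approach to fixed timesteps can be sketched as below. This is the generic pattern rather than what my engine will necessarily end up doing, and all of the names are hypothetical. Time is kept in integer microseconds to avoid floating-point drift in the accumulator.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical fixed-timestep clock.
struct FixedTimestep {
    std::int64_t stepMicroseconds;        // length of one simulation update
    std::int64_t accumulatedMicroseconds; // time not yet consumed by an update
};

struct SimulationAdvance {
    int updatesToRun;     // how many fixed updates to simulate now
    double interpolation; // in [0, 1): position between the last two updates
};

// Adds elapsed real time, consumes as many whole steps as fit, and reports
// how far into the next step the leftover time reaches.
inline SimulationAdvance advanceSimulation(FixedTimestep& clock,
                                           std::int64_t elapsedMicroseconds)
{
    assert(clock.stepMicroseconds > 0);
    assert(elapsedMicroseconds >= 0);
    clock.accumulatedMicroseconds += elapsedMicroseconds;
    const std::int64_t updates = clock.accumulatedMicroseconds / clock.stepMicroseconds;
    clock.accumulatedMicroseconds %= clock.stepMicroseconds;
    const double interpolation =
        static_cast<double>(clock.accumulatedMicroseconds) /
        static_cast<double>(clock.stepMicroseconds);
    return SimulationAdvance{static_cast<int>(updates), interpolation};
}
```

The interpolation factor is what would be used to blend between the two most recent simulation states when recording GPU commands.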

Conclusion

If you’ve made it this far congratulations! I will show the initial screenshot one more time, showing the current state of my engine’s rendering and how work for the GPU is scheduled, recorded, and submitted:

There is a follow-up post about adding simulation frames here.